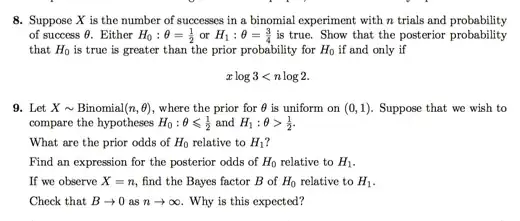

Let $p=\Pr(H_0)$ and $q = 1-p = \Pr(H_1)$ be the prior probabilities. The likelihood function is

\begin{align}
L(H_0\mid X=x) & = \binom n x \frac 1 {2^n} \\[12pt]
L(H_1\mid X=x) & = \binom n x \left(\frac 3 4\right)^x \left(\frac 1 4 \right)^{n-x}
\end{align}

$$
\frac{\Pr(H_0\mid X=x)}{\Pr(H_1\mid X=x)} = \frac{\Pr(H_0)}{\Pr(H_1)} \cdot \frac{L(H_0\mid X=x)}{L(H_1\mid X=x)} = \frac p q \cdot \frac{\dbinom n x \dfrac 1{2^n}}{\dbinom n x \left(\dfrac 3 4 \right)^x \left( \dfrac 1 4 \right)^{n-x}}
= \frac p q \cdot \frac{2^n}{3^x}.
$$

Then we have $\Pr(H_0\mid X=x)>\Pr(H_1\mid X=x)$ precisely if $\dfrac{2^n p}{3^x q} > 1$.

(The above is all done using odds rather than probability. It's a bit simpler that way. With probabilities, we would need $\Pr(H_0\mid X=x)+\Pr(H_1\mid X=x)=1$, so we'd need the normalizing constant $c$ for which $c(2^n p + 3^x q) = 1$.)
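As a quick sanity check of the odds formula, here is a minimal Python sketch. It assumes, reading off the two likelihoods above, that $H_0$ is $\theta=1/2$ and $H_1$ is $\theta=3/4$. The function name `posterior_odds_simple` and the values $n=10$, $x=7$, $p=1/2$ are purely illustrative and not part of the original problem.

```python
from fractions import Fraction
from math import comb


def posterior_odds_simple(n, x, p):
    """Posterior odds Pr(H0 | X=x) / Pr(H1 | X=x).

    Assumes H0: theta = 1/2 and H1: theta = 3/4, with prior Pr(H0) = p.
    """
    p = Fraction(p)
    q = 1 - p
    like_h0 = comb(n, x) * Fraction(1, 2) ** n
    like_h1 = comb(n, x) * Fraction(3, 4) ** x * Fraction(1, 4) ** (n - x)
    return (p / q) * (like_h0 / like_h1)


# Illustrative values only (not from the original question).
n, x, p = 10, 7, Fraction(1, 2)
odds = posterior_odds_simple(n, x, p)

# Closed form derived above: (p/q) * 2^n / 3^x.
closed_form = (p / (1 - p)) * Fraction(2 ** n, 3 ** x)

# Converting odds to a probability supplies the normalizing constant.
prob_h0 = odds / (1 + odds)

print(odds, closed_form, prob_h0)  # 1024/2187 1024/2187 1024/3211
```

With these values the odds are below $1$, so $H_1$ is the more probable hypothesis a posteriori.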

If $\theta$ is uniformly distributed on $(0,1)$, then $\Pr(H_0) = \Pr(\theta \le 1/2) = 1/2$.

Denote the prior probability distribution of $\theta$ by $\displaystyle \Pr(\theta\in A) = \int_A 1\,dt$ for $A\subseteq (0,1)$; thus the prior measure is $1\,dt$ on $(0,1)$. The likelihood is

$$

L(t\mid X=x) = \binom n x t^x (1-t)^{n-x}.

$$

Hence the posterior probability distribution of $\theta$ is

$$

c t^x (1-t)^{n-x}\cdot 1\,dt

$$

where the normalizing constant $c$ is chosen so that $\displaystyle\int_0^1 c t^x (1-t)^{n-x}\cdot 1\,dt = 1$. Integrating, we get

$$

\int_0^1 t^x(1-t)^{n-x}\cdot 1\,dt = \frac 1 {(n+1)\dbinom n x}.

$$

For a probabilistic method of evaluating this integral, see this answer.
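The value of the integral can also be checked exactly by expanding $(1-t)^{n-x}$ with the binomial theorem and integrating term by term. The sketch below does this with rational arithmetic; `integral_kernel` is a hypothetical helper name, and the test values of $n$ and $x$ are arbitrary.

```python
from fractions import Fraction
from math import comb


def integral_kernel(n, x, upper=1):
    """Exact integral of t^x (1-t)^(n-x) over [0, upper].

    Uses (1-t)^m = sum_j (-1)^j C(m, j) t^j with m = n - x,
    then integrates each power of t.
    """
    upper = Fraction(upper)
    m = n - x
    return sum(
        Fraction((-1) ** j * comb(m, j), x + j + 1) * upper ** (x + j + 1)
        for j in range(m + 1)
    )


# Compare with 1 / ((n+1) * C(n, x)) for a few arbitrary small cases.
for n in range(1, 8):
    for x in range(n + 1):
        assert integral_kernel(n, x) == Fraction(1, (n + 1) * comb(n, x))

print(integral_kernel(10, 7))  # 1/1320
```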

So we have

$$

\Pr(H_0\mid X=x) = (n+1)\binom n x \int_0^{1/2} t^x(1-t)^{n-x}\, dt

$$

and the posterior odds are

$$

\frac{\Pr(H_0\mid X=x)}{\Pr(H_1\mid X=x)} = \frac{(n+1)\binom n x \int_0^{1/2} t^x(1-t)^{n-x}\, dt}{(n+1)\binom n x \int_{1/2}^1 t^x(1-t)^{n-x}\, dt} = \frac{\int_0^{1/2} t^x(1-t)^{n-x}\, dt}{\int_{1/2}^1 t^x(1-t)^{n-x}\, dt}.

$$
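To see what this ratio looks like numerically, here is a small sketch that evaluates both integrals exactly by the same term-by-term expansion of $(1-t)^{n-x}$. The names `antiderivative` and `posterior_odds_uniform` are illustrative, and $n=10$, $x=7$ are example values I picked, not values from the question.

```python
from fractions import Fraction
from math import comb


def antiderivative(n, x, u):
    """Integral of t^x (1-t)^(n-x) over [0, u], via binomial expansion."""
    u = Fraction(u)
    m = n - x
    return sum(
        Fraction((-1) ** j * comb(m, j), x + j + 1) * u ** (x + j + 1)
        for j in range(m + 1)
    )


def posterior_odds_uniform(n, x):
    """Posterior odds of H0: theta <= 1/2 against H1: theta > 1/2
    under a uniform prior on (0, 1)."""
    lower_part = antiderivative(n, x, Fraction(1, 2))
    upper_part = antiderivative(n, x, 1) - lower_part
    return lower_part / upper_part


# Example values only.
odds = posterior_odds_uniform(10, 7)
prob_h0 = odds / (1 + odds)
print(odds, prob_h0)  # 29/227 29/256
```

By the symmetry $t \leftrightarrow 1-t$, the odds are exactly $1$ when $x = n/2$, which is a convenient check on the code.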

I can expand the numerator as in the above comment, and cancel the P[H0]. Then do I divide the top and bottom by P[H0|X=x] to find that the denominator is P[H0] + 1/Bayes Factor * P[H1], then substitute in the formulae for the Bayes Factor and proceed? Is this the correct approach?

– J. Bant Mar 25 '16 at 23:09