I'll consider a general fixed-precision floating-point system, which includes any of the standard computer floating-point number types such as the single- or double-precision formats of the IEEE 754 standard.

But I will only consider the normal numbers within such a system, not the zeros, denormals, infinities, and other special cases.

A system of floating-point representation of this general type is described by four integers:

$$\begin{array}{cl}
b & \text{the base, also known as the radix,}\\
m & \text{the number of digits in the significand, also called the mantissa,}\\
E_\min & \text{the minimum value of the exponent,}\\
E_\max & \text{the maximum value of the exponent,}
\end{array}$$

where $b>1,$ $m>0,$ and $E_\max \geq E_\min.$

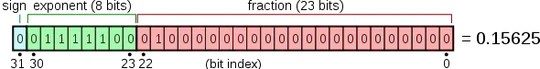

For example, the IEEE 754-2008 binary32 (single-precision) floating-point format illustrated in the question sets

$b=2,$ $m=24,$ $E_\min=-126,$ and $E_\max=127.$

Note that the most significant digit of the significand is always $1$ for normal numbers in this format, so it is omitted from the stored bits; only the other $23$ bits of the significand need to be stored. This format also reserves two of the possible $8$-bit exponent values for special cases, so the exponents of normal numbers are stored as $8$-bit integers ranging from $00000001_\mathrm{binary} = 1_\mathrm{decimal}$ to $11111110_\mathrm{binary} = 254_\mathrm{decimal}$. Adding the exponent bias $-127$ to these values gives the minimum and maximum exponent values, $1-127=-126$ and $254-127=127.$ (The standard itself states the bias as $+127$ and subtracts it from the stored value, which amounts to the same thing.)

The value of a particular floating-point number in such a system is

$$ \sigma \times n \times b^{E-m+1} = \sigma \times \left(\sum_{k=0}^{m-1} s_{-k}b^{-k}\right) \times b^E \tag1$$

where $\sigma \in \{-1,1\},$ $n$ is an $m$-digit integer in base $b$ representing the significand (a most significant digit $s_0 \in \{1,\ldots,b-1\}$ followed by $m-1$ less significant digits $s_{-1}$ through $s_{-(m-1)}$ selected from $\{0,\ldots,b-1\}$), and $E$ is an integer such that $E_\min\leq E \leq E_\max.$
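As a quick check on Equation $1$, here is a minimal Python sketch that evaluates $\sigma \times n \times b^{E-m+1}$ exactly. The function name `float_value` is my own choice for illustration, and using `fractions.Fraction` for exact arithmetic is an assumption of the sketch, not part of any floating-point format.

```python
from fractions import Fraction

def float_value(sigma, n, E, b, m):
    """Exact value sigma * n * b**(E - m + 1) from Equation 1, as a Fraction."""
    # A normal number has a nonzero leading digit, so b**(m-1) <= n < b**m.
    assert sigma in (-1, 1) and b ** (m - 1) <= n < b ** m
    return sigma * n * Fraction(b) ** (E - m + 1)

# binary32 parameters: b = 2, m = 24.
one = float_value(1, 2 ** 23, 0, 2, 24)             # significand 1.00...0, E = 0
largest = float_value(1, 2 ** 24 - 1, 127, 2, 24)   # all significand bits set, E = E_max
print(one)             # 1
print(float(largest))  # roughly 3.4028e38
```

The two sample values are the number $1$ and the largest normal binary32 number, $(2^{24}-1)\times 2^{104}.$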

To convert a real number $x$ to the floating-point number system with parameters $b,$ $m,$ $E_\min,$ and $E_\max,$ proceed as follows:

Find $E$ such that $b^E \leq \lvert x\rvert < b^{E+1}.$

There are various ways to do this: take $\lfloor \log_b \lvert x\rvert \rfloor$ (the floor, which differs from the integer part when $\lvert x\rvert<1$); multiply or divide $\lvert x\rvert$ by powers of $b$ until you get a number in the range $[1, b)$ and count how many powers of $b$ were needed; or compute positive or negative powers of $b$ until you find the largest one that does not exceed $\lvert x\rvert$.

If the result of the previous step does not satisfy $E_\min \leq E \leq E_\max,$ you have an exception (overflow or underflow) and must act according to however you have decided to deal with such exceptions.

But if the result satisfies $E_\min \leq E \leq E_\max,$ take $y = \lvert x\rvert \times b^{m-E-1}.$

Round $y$ to the nearest integer, $n,$ using whatever rounding rules you have selected.

For the IEEE 754 "round to nearest, ties to even" rule, let $n = \left\lceil y - \frac12\right\rceil$ if $\lfloor y\rfloor$ is even, and $n = \left\lfloor y + \frac12\right\rfloor$ if $\lfloor y\rfloor$ is odd.

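For "round to nearest, ties away from zero," let $n = \left\lfloor y + \frac12\right\rfloor.$

With either rule, if $y$ is so close to $b^m$ that rounding gives $n = b^m,$ which no longer fits in $m$ digits, use $n = b^{m-1}$ and increase $E$ by $1$ instead, then check again that $E \leq E_\max.$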

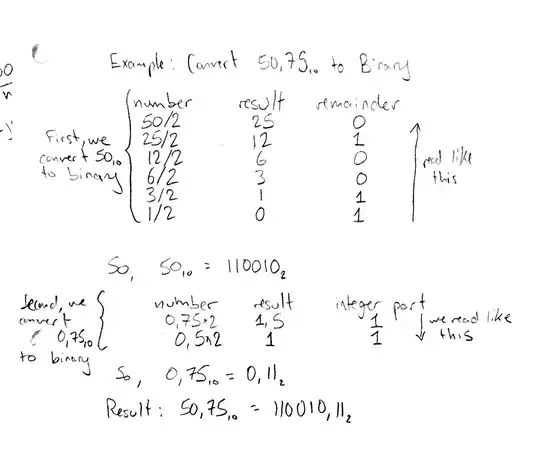

Convert $n$ to base $b$ using any of the usual methods for converting an integer to a base-$b$ representation.

The digits of this representation are $s_0, s_{-1}, \ldots, s_{-(m-1)},$ with $s_0$ the most significant digit.

Set $\sigma$ to the sign of $x.$

You now have all the parts of the floating-point value shown in Equation $1$.
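The whole procedure can be sketched in Python along the following lines. This is only an illustration under stated assumptions: the name `to_floating_point` and the `ties` parameter are hypothetical, exact rational arithmetic via `fractions.Fraction` stands in for "a real number $x$", out-of-range exponents simply raise an exception, and the renormalization when rounding carries up to $b^m$ is my own handling of that corner case.

```python
from fractions import Fraction
from math import ceil, floor

def to_floating_point(x, b, m, E_min, E_max, ties="even"):
    """Return (sigma, E, digits) for nonzero x; digits[0] is s_0."""
    x = Fraction(x)                      # exact rational arithmetic throughout
    if x == 0:
        raise ValueError("zero is not a normal number")
    sigma = 1 if x > 0 else -1

    # Find E by scaling |x| into [1, b) and counting the powers of b used.
    scaled, E = abs(x), 0
    while scaled >= b:
        scaled, E = scaled / b, E + 1
    while scaled < 1:
        scaled, E = scaled * b, E - 1
    if not E_min <= E <= E_max:
        raise ArithmeticError(f"exponent {E} is out of range")

    # y = |x| * b**(m - E - 1), rounded to the integer n.
    y = scaled * b ** (m - 1)
    half = Fraction(1, 2)
    if ties == "even" and floor(y) % 2 == 0:
        n = ceil(y - half)
    else:
        # Either ties-to-even with an odd floor, or ties away from zero.
        n = floor(y + half)

    # Assumption: if rounding carried up to b**m, renormalize and bump E.
    if n == b ** m:
        n, E = b ** (m - 1), E + 1
        if E > E_max:
            raise ArithmeticError("overflow after rounding")

    # Base-b digits of n, collected least significant first, then reversed.
    digits = []
    for _ in range(m):
        n, digit = divmod(n, b)
        digits.append(digit)
    return sigma, E, digits[::-1]

print(to_floating_point("-2.5", 10, 1, -9, 9))               # (-1, 0, [2])
print(to_floating_point("-2.5", 10, 1, -9, 9, ties="away"))  # (-1, 0, [3])
```

Passing `x` as a string such as `"3.14"` keeps the decimal value exact; passing a Python `float` would instead convert the already-rounded double-precision value.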

To produce the bitwise representation of an IEEE 754 binary floating-point number as illustrated in the question, set the sign bit to $0$ if $\sigma=1,$ or to $1$ if $\sigma=-1.$

Subtract the exponent bias from $E,$ write the result as an unsigned binary integer, and set the bits of the exponent to that value, padding on the left with zeros to fill the prescribed number of bits of the exponent.

Finally, set the bits of the "fraction" to the bits $s_{-1},\ldots,s_{-(m-1)}.$
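For the binary32 layout specifically, the packing step might look like the sketch below. The function name `pack_binary32` is hypothetical, and the sketch assumes the usual layout of sign in bit $31,$ exponent in bits $23$ through $30,$ and fraction in bits $0$ through $22.$

```python
def pack_binary32(sigma, n, E):
    """Assemble the 32-bit pattern from sigma, the 24-bit integer n, and E."""
    assert sigma in (-1, 1) and 2 ** 23 <= n < 2 ** 24 and -126 <= E <= 127
    sign_bit = 0 if sigma == 1 else 1
    stored_exponent = E + 127     # E minus the bias of -127 used in the text
    fraction = n - 2 ** 23        # drop the implicit leading 1 (digit s_0)
    return (sign_bit << 31) | (stored_exponent << 23) | fraction

# -6 = -(1.1 in binary) * 2**2, so n = 3 * 2**22 and E = 2.
bits = pack_binary32(-1, 3 * 2 ** 22, 2)
print(f"{bits:032b}")   # 11000000110000000000000000000000
```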

Example: Convert $3.14$ to the IEEE 754-2008 binary32 format.

We have $b=2,$ $m=24,$ $E_\min=-126,$ and $E_\max=127.$

We find that $b^1 = 2^1 \leq \lvert 3.14\rvert < 2^2 = b^2,$ so $E = 1.$

Since $E_\min \leq 1\leq E_\max,$ we set $y = \lvert 3.14\rvert \times b^{m-E-1} = \lvert 3.14\rvert\times 2^{22} = 13170114.56.$

Following either of the "round to nearest" rules, we get $n = 13170115.$

Converting this to binary, $n = 110010001111010111000011_\mathrm{binary}.$

Since $3.14$ is positive, we put $0$ in bit $31.$

The exponent bias for this format is $-127,$ so since $E=1$ and $1 - (-127) = 128 = 10000000_\mathrm{binary},$ we put $10000000$ in bits $23$ through $30,$ inclusive.

Finally, we put $10010001111010111000011$ (obtained by removing the most significant bit of $n$) in bits $0$ through $22,$ inclusive.
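One way to double-check this result is to let Python's standard `struct` module do the conversion and compare bit patterns. This is only a sanity check of the worked example; it assumes the platform's conversion from double to single precision rounds to nearest, and the variable names are mine.

```python
import struct

# The three fields derived by hand in the example above.
sign, exponent, fraction = 0, 0b10000000, 0b10010001111010111000011
assembled = (sign << 31) | (exponent << 23) | fraction

# Convert 3.14 to binary32 and reinterpret its 4 bytes as an unsigned integer.
(native,) = struct.unpack(">I", struct.pack(">f", 3.14))

print(f"{assembled:#010x} {native:#010x}")   # 0x4048f5c3 0x4048f5c3
```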