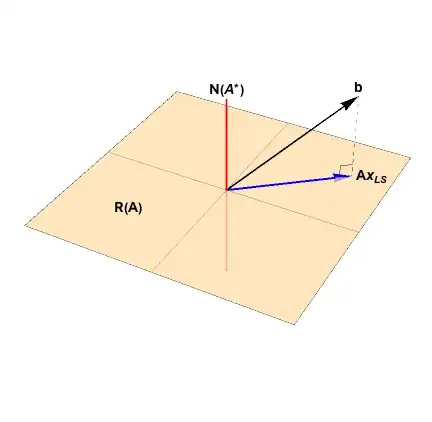

Let $b\in \mathbb{R}^m$ and $A\in M_{m\times n}(\mathbb{R})$ with $m>n$ and $\operatorname{rank}(A)=n$, and let $x^*\in \mathbb{R}^n$ be the least-squares solution of $Ax=b$.

i) Show that $r^*=b-Ax^*\in N(A^T)$, where $N(A^T)$ denotes the null space of $A^T$.

ii) Find the pseudo-inverse of $A$ and justify your answer.

What I did

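Let $z^*=Ax^*\in R(A)$. Since $x^*$ minimizes $\|b-Ax\|_2$, $z^*$ is the point of $R(A)$ closest to $b$, i.e. the orthogonal projection of $b$ onto $R(A)$. By the orthogonal decomposition theorem, $\mathbb{R}^m=R(A)\oplus N(A^T)$, so $b=z^*+r^*$ with $z^*\in R(A)$ and $r^*\in N(A^T)$, and therefore $r^*=b-z^*=b-Ax^*\in N(A^T)$.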
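
As a cross-check on part i), here is a sketch of a direct argument that does not use the decomposition theorem. It assumes the least-squares solution is defined as a minimizer of $f(x)=\|b-Ax\|_2^2$; the function name $f$ is introduced only for this sketch.

$$
\begin{aligned}
f(x) &= (b-Ax)^T(b-Ax) = b^Tb - 2x^TA^Tb + x^TA^TAx,\\
\nabla f(x) &= -2A^Tb + 2A^TAx = -2A^T(b-Ax).
\end{aligned}
$$

Since $f$ is differentiable and $x^*$ minimizes it, $\nabla f(x^*)=0$, which gives

$$
A^T(b-Ax^*) = A^Tr^* = 0 \quad\Longrightarrow\quad r^*\in N(A^T).
$$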

But I don't know how to do part ii).