An $n$-dimensional (column) vector $y$ is defined as follows:

$Ay=x+v$,

where $A$ is an $m \times n$ matrix with $m<n$ and full row rank, $x$ is an $m$-dimensional column vector of constants, and $v$ is an $m$-dimensional column vector whose elements are normally distributed with mean zero and a diagonal covariance matrix.

If I have understood correctly, if the equation were just $Ay=x$, i.e. without the random vector, it could be approximately solved for $y$ with the pseudoinverse $A^+$ (which here is also a right inverse of $A$), giving $y=A^+x$ (approximately).

This works as well for $Ay=x+v$, so that approximately $y=A^+(x+v)$.

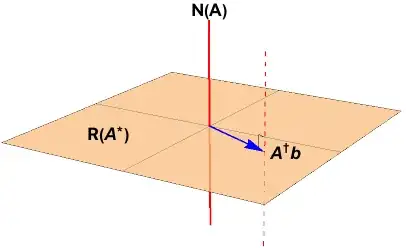

In the case without the random vector, I understand that $A^+Ay$ results in a vector $y'$ which is an approximation of $y$ with the property that the Euclidean norm $|| Ay'-x ||$ cannot be made smaller by using any other vector instead of $y'$.

(see e.g. the introductory part of http://arxiv.org/pdf/1110.6882.pdf)

I.e., introducing a candidate inverse $M$ as a variable, and writing the estimate of $y$ in brackets as a function of $Ay$, the Euclidean norm $|| A(MAy)-x ||$ is minimized by setting $M=A^+$.
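To make the role of $M$ concrete, here is a minimal NumPy sketch of the noise-free case. The sizes, the seed, and the helper name `residual_norm` are my own assumptions for illustration only. It evaluates $|| A(MAy)-x ||$ with $x=Ay$ for $M=A^+$ and for an arbitrary random $M$.

```python
import numpy as np

rng = np.random.default_rng(2)      # arbitrary seed, for reproducibility only
m, n = 3, 5                         # hypothetical sizes with m < n
A = rng.standard_normal((m, n))     # random Gaussian matrix: full row rank almost surely
y = rng.standard_normal(n)          # the "true" n-dimensional vector
x = A @ y                           # noise-free case: x = A y

def residual_norm(M):
    """Return ||A(M A y) - x|| for a candidate inverse M of shape (n, m)."""
    return np.linalg.norm(A @ (M @ (A @ y)) - x)

res_pinv = residual_norm(np.linalg.pinv(A))                # M = A^+
res_random = residual_norm(rng.standard_normal((n, m)))    # some other M
print(res_pinv, res_random)
```

With full row rank, $AA^+=I$, so the residual for $M=A^+$ should vanish up to rounding error, while a generic $M$ leaves a nonzero residual.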

For the interpretation of the pseudoinverse in the case with the random vector, the Euclidean norm from above can be rewritten with $x+v$ in place of $x$:

$|| A(MAy)-(x+v) ||$

As noted by Ian in the comments below, $A^+$ depends entirely on $A$.

($A$ has full row rank, so the pseudoinverse here can be computed as $A^*(AA^*)^{-1}$, http://en.wikipedia.org/wiki/Moore%E2%80%93Penrose_pseudoinverse#Definition, which, if the matrix contains only real numbers as assumed here, becomes $A^T(AA^T)^{-1}$ http://planetmath.org/conjugatetranspose).
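The closed form for the real, full-row-rank case can be checked numerically. The following is only a sketch with a hypothetical random matrix (sizes and seed are assumptions); it compares $A^T(AA^T)^{-1}$ with NumPy's `np.linalg.pinv` and checks the right-inverse property $AA^+=I$.

```python
import numpy as np

rng = np.random.default_rng(0)      # arbitrary seed
m, n = 3, 5                         # hypothetical sizes with m < n
A = rng.standard_normal((m, n))     # real matrix, full row rank almost surely

# Closed form valid for real A with full row rank
A_plus_formula = A.T @ np.linalg.inv(A @ A.T)

# General-purpose pseudoinverse (computed via the SVD)
A_plus_numpy = np.linalg.pinv(A)

formulas_agree = np.allclose(A_plus_formula, A_plus_numpy)
is_right_inverse = np.allclose(A @ A_plus_formula, np.eye(m))
print(formulas_agree, is_right_inverse)
```

In practice `np.linalg.pinv` is usually preferable to forming $(AA^T)^{-1}$ explicitly, since the latter can be poorly conditioned.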

So with $A^+$ depending only on $A$, $A^+$ minimizes the Euclidean norm $|| A(A^+Ay)-(x+v) ||$ for every realization of $v$.
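As a rough numerical check of the claim for individual realizations of $v$, the sketch below draws a few noise vectors with a diagonal covariance and computes $A^+(x+v)$ each time. The sizes, seed, and standard deviations `sigma` are hypothetical values chosen for illustration. It also compares the result with `np.linalg.lstsq`, which returns the minimum-norm least-squares solution for an underdetermined system.

```python
import numpy as np

rng = np.random.default_rng(1)          # arbitrary seed
m, n = 3, 5                             # hypothetical sizes with m < n
A = rng.standard_normal((m, n))         # full row rank almost surely
x = rng.standard_normal(m)              # vector of constants
sigma = np.array([0.5, 1.0, 2.0])       # hypothetical std devs (diagonal covariance)
A_plus = np.linalg.pinv(A)              # depends on A only, not on v

residuals = []
matches_lstsq = True
for _ in range(5):
    v = sigma * rng.standard_normal(m)  # one realization of the noise
    b = x + v
    y_hat = A_plus @ b                  # estimate A^+(x + v)
    residuals.append(np.linalg.norm(A @ y_hat - b))
    y_lstsq, *_ = np.linalg.lstsq(A, b, rcond=None)
    matches_lstsq = matches_lstsq and np.allclose(y_hat, y_lstsq)

max_residual = max(residuals)
print(max_residual, matches_lstsq)
```

Because $AA^+=I$ when $A$ has full row rank, the residual should be zero up to rounding for every realization, so the minimum is attained exactly here rather than only approximately.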

The pseudoinverse can therefore in general be interpreted as an inverse that provides the approximation $x'$ for the vector $x$ (in a vector-matrix equation $Ax=y$) which minimizes the Euclidean norm of the difference between $y$ and its estimate $y'=Ax'$, and if $y$ is random, this holds for every realization of $y$.

Is this correct?