Quoting Rahul's answer:

> It's not hard to show that if the covariance matrix of the original data points $x_i$ was $\Sigma$, the variance of the new data points is just $u^{T}\Sigma u$.

The covariance between sets $X$ and $Y$ is defined as $\operatorname{cov}(X,Y) = \frac{1}{n}\sum_i (x_i-\bar{x})(y_i-\bar{y})$, where $\bar{x}$ and $\bar{y}$ denote the means of $X$ and $Y$.
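To make the $\frac{1}{n}$ convention concrete, here is a minimal NumPy sketch of this definition on two small made-up arrays (the helper name `covariance` and the data are purely illustrative). It also shows a common source of off-by-a-factor confusion: `np.cov` divides by $n-1$ by default, and only matches the $\frac{1}{n}$ definition when `bias=True` is passed.

```python
import numpy as np

def covariance(x, y):
    """Population covariance: (1/n) * sum_i (x_i - mean(x)) * (y_i - mean(y))."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    return np.sum((x - x.mean()) * (y - y.mean())) / n

# Hypothetical example data.
x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([2.0, 4.0, 5.0, 9.0])

c = covariance(x, y)
# np.cov uses 1/(n - 1) by default; bias=True switches to the 1/n convention above.
c_np = np.cov(x, y, bias=True)[0, 1]
c_unbiased = np.cov(x, y)[0, 1]
print(c, c_np, c_unbiased)
```

Whichever normalization is used, the same factor must appear in $\Sigma$ for $u^{T}\Sigma u$ to equal the variance computed with that normalization.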

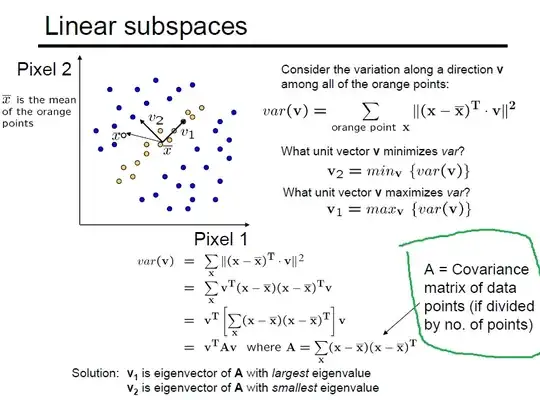

Some other material I've found says something different from what I quoted: there, the variance is not equal to $u^{T}\Sigma u$; it would be only if $A$ were multiplied by $\frac{1}{n}$. They say $A$ would be a covariance matrix if that coefficient were present, but it isn't. This looks somewhat incompatible with the quoted statement.
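As a sanity check of how the $\frac{1}{n}$ factor enters, here is a small NumPy sketch on random data. Since the image's exact definition isn't transcribed here, it assumes that $A = \sum_i (x_i-\bar{x})(x_i-\bar{x})^{T}$, i.e. the covariance matrix without the $\frac{1}{n}$; the data, the direction `u`, and the sample size are arbitrary illustrative choices. Under that assumption, $u^{T}Au$ comes out as $n$ times the variance of the projected points $u^{T}x_i$, while $u^{T}\Sigma u$ with $\Sigma = A/n$ matches the variance itself.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d = 500, 3
X = rng.normal(size=(n, d))          # rows are the data points x_i (random, illustrative)
u = np.array([1.0, 2.0, -1.0])
u /= np.linalg.norm(u)               # unit projection direction (arbitrary choice)

Xc = X - X.mean(axis=0)              # centred data x_i - x_bar
A = Xc.T @ Xc                        # assumed A: sum_i (x_i - x_bar)(x_i - x_bar)^T, no 1/n
Sigma = A / n                        # covariance matrix with the 1/n factor

proj = X @ u                         # new data points u^T x_i
var_proj = np.mean((proj - proj.mean()) ** 2)   # (1/n) * sum of squared deviations

print(var_proj, u @ Sigma @ u, (u @ A @ u) / n)
```

Under this assumption, the two statements differ only in whether the $\frac{1}{n}$ is kept inside the matrix.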

So the question is: who is wrong here, and what is the variance equal to? I suppose Rahul is right that the variance equals $u^{T}\Sigma u$, where $\Sigma$ is the covariance matrix. But the picture above proves a different equality, so what's going on here?

Here on page 8, the author derives the equality supporting Rahul's claim (I can't quite understand what's going on there). Which one is correct?
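For reference, here is one short way to get Rahul's equality (not necessarily the same route as the page-8 derivation). It assumes the new data points are the projections $u^{T}x_i$ and that $\Sigma = \frac{1}{n}\sum_i (x_i-\bar{x})(x_i-\bar{x})^{T}$, with the same $\frac{1}{n}$ as the covariance definition above. The mean of the projections is $u^{T}\bar{x}$ by linearity, and each squared term is rewritten using $(u^{T}v)^2 = u^{T}v\,v^{T}u$:

$$
\begin{aligned}
\operatorname{Var}(u^{T}x) &= \frac{1}{n}\sum_i \left(u^{T}x_i - u^{T}\bar{x}\right)^2
= \frac{1}{n}\sum_i u^{T}(x_i-\bar{x})(x_i-\bar{x})^{T}u \\
&= u^{T}\left(\frac{1}{n}\sum_i (x_i-\bar{x})(x_i-\bar{x})^{T}\right)u = u^{T}\Sigma u.
\end{aligned}
$$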