Projective transformations in general

Projective transformation matrices work on homogeneous coordinates. So the transformation

$$\begin{bmatrix} a & b & c & d \\ e & f & g & h \\ i & j & k & l \\ m & n & o & p \end{bmatrix}\cdot\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix} = \lambda\begin{bmatrix} x' \\ y' \\ z' \\ 1 \end{bmatrix}$$

actually means

$$\begin{bmatrix}x\\y\\z\end{bmatrix}\mapsto\frac1{mx+ny+oz+p}\begin{bmatrix} ax+by+cz+d \\ ex+fy+gz+h \\ ix+jy+kz+l \end{bmatrix}$$

so compared to linear transformations you gain the power to express translations and division by a linear function of the coordinates.
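To make the homogeneous division concrete, here is a minimal sketch in plain Python. The helper name `apply_projective` and the two sample matrices are my own illustrative choices, not from the original; matrices are plain lists of rows.

```python
def apply_projective(M, p):
    """Apply a 4x4 projective matrix M (list of rows) to a 3D point p."""
    x, y, z = p
    # Homogeneous image: multiply M by the column vector (x, y, z, 1).
    hx, hy, hz, w = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in M)
    if w == 0:
        raise ValueError("point is mapped to infinity (w = 0)")
    # Dividing by w = m*x + n*y + o*z + p gives the Cartesian image.
    return (hx / w, hy / w, hz / w)


# Translation by (1, 2, 3): only the last column differs from the identity.
T = [[1, 0, 0, 1],
     [0, 1, 0, 2],
     [0, 0, 1, 3],
     [0, 0, 0, 1]]

# Division by z: the last row makes z the homogeneous weight w.
P = [[1, 0, 0, 0],
     [0, 1, 0, 0],
     [0, 0, 1, 0],
     [0, 0, 1, 0]]

print(apply_projective(T, (1, 1, 1)))  # (2.0, 3.0, 4.0)
print(apply_projective(P, (2, 4, 2)))  # (1.0, 2.0, 1.0)
```

Neither map can be written as a 3x3 linear matrix acting on $(x,y,z)$ alone, which is exactly what the extra homogeneous coordinate buys you.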

Just like with linear transformations, you get the inverse operation by computing the inverse matrix. Unlike with linear or affine transformations, however, any nonzero multiple of the matrix describes the same operation, because the common factor cancels in the division. So you can in fact use the adjugate matrix, which is the inverse multiplied by the determinant, to describe the inverse transformation without ever dividing by the determinant. Then you plug in the points $(\pm1, \pm1, \pm1, 1)$ and apply that inverse transformation in order to obtain the corners of your frustum in view coordinates.
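Here is a sketch of that recipe in plain Python: compute the adjugate by cofactors, then map the eight corners $(\pm1,\pm1,\pm1)$ back. The projection matrix is a hypothetical OpenGL-style one (90° field of view, aspect ratio 1, near plane at distance 1, far plane at distance 3, camera looking down the negative $z$ axis); substitute your own matrix. The helper names are mine.

```python
from itertools import product


def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def adjugate4(M):
    """Adjugate of a 4x4 matrix: the transpose of its cofactor matrix.

    adj(M) = det(M) * inverse(M), so as a projective map it is the inverse.
    """
    adj = [[0] * 4 for _ in range(4)]
    for i in range(4):
        for j in range(4):
            minor = [[M[r][c] for c in range(4) if c != j]
                     for r in range(4) if r != i]
            # The cofactor C_ij goes to position (j, i) of the adjugate.
            adj[j][i] = (-1) ** (i + j) * det3(minor)
    return adj


def apply_projective(M, p):
    """Apply a 4x4 projective matrix to a 3D point and divide by w."""
    x, y, z = p
    hx, hy, hz, w = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in M)
    return (hx / w, hy / w, hz / w)


# Hypothetical projection matrix: near = 1, far = 3, 90 degree field of view.
n, f = 1, 3
M = [[1, 0, 0, 0],
     [0, 1, 0, 0],
     [0, 0, (f + n) / (n - f), 2 * f * n / (n - f)],
     [0, 0, -1, 0]]

M_inv = adjugate4(M)  # equals det(M) = -3 times the true inverse
corners = [apply_projective(M_inv, c) for c in product((-1, 1), repeat=3)]
for c in corners:
    print(c)
```

For this matrix the near corners come out as $(\pm1,\pm1,-1)$ and the far corners as $(\pm3,\pm3,-3)$, even though the adjugate is $-3$ times the actual inverse.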

This post describes how you can find a projective transformation if you know the images of five points in space, no four of which lie on a common plane.
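The linked post is not reproduced here, but one standard way to do this is sketched below under my own assumptions (plain Python, hypothetical helper names, no error handling beyond a singular-pivot check). In homogeneous coordinates, write the fifth point as a combination $\lambda_1 p_1 + \dots + \lambda_4 p_4$ of the first four; the matrix with columns $\lambda_i p_i$ then sends the standard basis vectors to multiples of $p_1,\dots,p_4$ and $(1,1,1,1)$ to $p_5$. Doing the same for the image points gives a matrix $B$, and $B A^{-1}$ is the desired transformation, up to a scalar factor.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    # Work on a float copy of the augmented matrix [A | b].
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: points not in general position")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper triangular system.
    x = [0.0] * n
    for r in reversed(range(n)):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def inverse(A):
    """Invert A column by column: column j of the inverse solves A x = e_j."""
    n = len(A)
    cols = [solve(A, [1.0 if i == j else 0.0 for i in range(n)]) for j in range(n)]
    return [[cols[j][i] for j in range(n)] for i in range(n)]


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def basis_to_points(pts):
    """Matrix sending e1..e4 to multiples of pts[0..3] and (1,1,1,1) to pts[4]."""
    h = [list(p) + [1.0] for p in pts]                   # homogeneous coordinates
    A = [[h[c][r] for c in range(4)] for r in range(4)]  # columns are h[0..3]
    lam = solve(A, h[4])                                 # A * lam = h[4]
    return [[A[r][c] * lam[c] for c in range(4)] for r in range(4)]


def projective_from_5_points(src, dst):
    """4x4 matrix (up to scale) mapping the five src points onto the five dst points."""
    A = basis_to_points(src)
    B = basis_to_points(dst)
    return matmul(B, inverse(A))


def apply_projective(M, p):
    x, y, z = p
    hx, hy, hz, w = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in M)
    return (hx / w, hy / w, hz / w)


# Usage: recover a known (hypothetical) matrix M0 from the images of five points.
M0 = [[2, 0, 0, 1], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 1, 2]]
src = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]
dst = [apply_projective(M0, p) for p in src]
M = projective_from_5_points(src, dst)
print(apply_projective(M, (0.5, 2.0, 0.25)))
print(apply_projective(M0, (0.5, 2.0, 0.25)))
```

The recovered `M` is generally a scalar multiple of `M0` rather than `M0` itself, but both lines print the same point up to rounding. A vanishing $\lambda_i$ is the algebraic symptom of four of the points lying on a common plane.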

Your perspective transformations

There are two ways to understand your perspective transformations: one is to compute the coordinates of the corners of the frustum, the other is to investigate the structure of the matrix. I'll leave the former as an exercise and follow the latter.

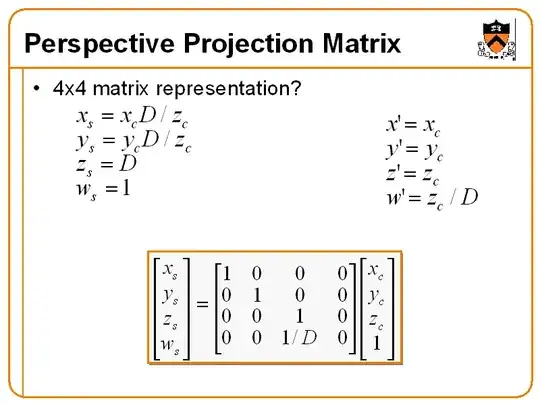

The first example you quote already has its geometric effect noted in the upper left corner. You can think of it as two distinct steps. In the first step, the vector $(x,y,z)$ is divided by $z$, which projects every point along its line of sight onto the plane $z=1$. Afterwards, everything is scaled by $D$ in the $x$, $y$ and $z$ directions. So every point ends up on the plane $z'=D$, a plane of fixed depth somewhere in your image space, and all depth information is lost. That is of little use if you want to do something like depth comparisons.
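Assuming the matrix in the first image has the form suggested by the description above (diagonal entries $D$, last row $(0,0,1,0)$; the image itself is not available here, so this form is inferred), a short sketch shows the loss of depth. The function name `first_projection` is hypothetical.

```python
def first_projection(D, p):
    """Divide (x, y, z) by z, then scale by D (assumed matrix form)."""
    M = [[D, 0, 0, 0],
         [0, D, 0, 0],
         [0, 0, D, 0],
         [0, 0, 1, 0]]  # last row: the homogeneous weight w is z
    x, y, z = p
    hx, hy, hz, w = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in M)
    return (hx / w, hy / w, hz / w)


# Two points on the same line of sight, at different depths:
print(first_projection(2.0, (1, 2, 4)))    # (0.5, 1.0, 2.0)
print(first_projection(2.0, (3, 6, 12)))   # (0.5, 1.0, 2.0)
```

Both points land on the same image point with $z'=D=2$, so after this transformation there is no way to tell which of them was in front.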

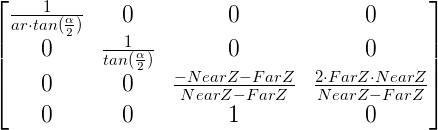

The second example appears to be the standard OpenGL perspective projection as implemented by gluPerspective, except for some sign changes. It doesn't map everything to the same depth, but instead maps the range between NearZ and FarZ to the interval $[-1,1]$. The new $(x',y')$ coordinates, on the other hand, are essentially the old $(x,y)$ coordinates divided by $z$ to effect a projection onto the image plane, followed by a scaling which depends on an angle $\alpha$ denoting the field of view. The scaling also takes a factor $ar$ into account, which denotes the aspect ratio.
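For comparison, here is a sketch of the matrix in the form documented for gluPerspective, which uses the OpenGL convention that the camera looks down the negative $z$ axis; the signs in the matrix you quote may differ. Unlike gluPerspective, which takes its field of view in degrees, this helper (my own, hypothetical `perspective`) takes $\alpha$ as the full vertical field of view in radians.

```python
import math


def perspective(alpha, ar, near, far):
    """gluPerspective-style matrix; alpha is the full vertical field of view in radians."""
    f = 1.0 / math.tan(alpha / 2)  # cotangent of half the field of view
    return [[f / ar, 0, 0, 0],
            [0, f, 0, 0],
            [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
            [0, 0, -1, 0]]  # w = -z: the division by depth


def apply_projective(M, p):
    x, y, z = p
    hx, hy, hz, w = (r[0] * x + r[1] * y + r[2] * z + r[3] for r in M)
    return (hx / w, hy / w, hz / w)


M = perspective(math.radians(60), 16 / 9, 0.1, 100.0)
print(apply_projective(M, (0, 0, -0.1))[2])    # near plane: approximately -1
print(apply_projective(M, (0, 0, -100.0))[2])  # far plane: approximately +1
```

The depth row maps $z=-\text{near}$ to $-1$ and $z=-\text{far}$ to $+1$, while $x$ and $y$ are divided by $-z$ and scaled by $\cot(\alpha/2)$, with an extra division by $ar$ in the $x$ direction.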