It is the notation that causes the confusion!

If we define

\begin{align*}
\phi\,\colon\, &\mathbb{R}^{n\times n}\to\mathbb{R}\\
&Y\mapsto a^TYb
\end{align*}

it is certainly true that

$$
\frac{\partial\phi}{\partial x_{ij}}(Y) = a_ib_j=(ab^T)_{ij}
$$

irrespective of whether $Y$ is symmetric or not. This is easily verified by observing that $\phi$ is linear and therefore coincides with its own differential at every point, i.e.,

$$
D\phi(Y) = \phi\qquad\text{or}\qquad
D\phi(Y)(M) = a^TMb\quad (M\in\mathbb{R}^{n\times n}).
$$

This allows us to write

$$
\frac{\partial\phi}{\partial x_{ij}}(Y) = D\phi(Y)(E_{ij}) = a^TE_{ij}b = a_ib_j,
$$

which corresponds to your partial derivative w.r.t. $X$. Equivalently,

$$
\nabla\phi(Y) = ab^T.
$$
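As a quick sanity check of the linearity argument, here is a minimal NumPy sketch that builds the gradient entry by entry as $\phi(E_{ij})$ and compares it with $ab^T$. NumPy, the helper `gradient_of_linear`, and the concrete vectors `a`, `b` and matrix `M` are illustrative assumptions, not part of the argument above; the helper is only valid for linear functions, where $D\phi(Y)=\phi$ for every $Y$.

```python
import numpy as np

def phi(Y, a, b):
    """phi(Y) = a^T Y b, a linear functional on n x n matrices."""
    return a @ Y @ b

def gradient_of_linear(f, n):
    """Gradient of a LINEAR f on n x n matrices (hypothetical helper).

    For linear f, Df(Y) = f at every Y, so df/dx_ij(Y) = f(E_ij);
    the result therefore does not depend on Y at all.
    """
    G = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            E = np.zeros((n, n))
            E[i, j] = 1.0          # matrix unit E_ij
            G[i, j] = f(E)
    return G

a = np.array([1.0, 2.0, 3.0])
b = np.array([0.0, 1.0, -1.0])
G = gradient_of_linear(lambda Y: phi(Y, a, b), 3)
print(np.allclose(G, np.outer(a, b)))            # True: grad phi = a b^T

# Dphi(Y)(M) = a^T M b equals the Frobenius inner product <a b^T, M>.
M = np.array([[1.0, 2.0, 0.0],
              [0.0, 1.0, 3.0],
              [4.0, 0.0, 1.0]])
print(np.isclose(phi(M, a, b), np.sum(G * M)))   # True
```

Note that `G` is not symmetric (here `G[0, 1]` is 1 while `G[1, 0]` is 0), even though nothing prevents us from evaluating $\phi$ at a symmetric $Y$.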

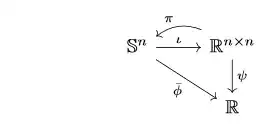

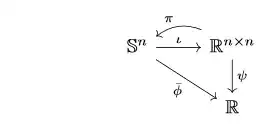

However, there is another interpretation for the same notation, which becomes clear if we name the functions we use. Consider the following diagrams

where $\iota$ is the natural embedding, $\pi$ the projection $\pi(Y)=(1/2)(Y+Y^T)$, $\phi$ is as above, $\bar{\phi}$ is just the restriction of $\phi$ and $\psi$ is given by

$$
\psi(Y) = a^T(Y+Y^T)b/2.
$$

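These diagrams are not equivalent. In fact, the one on the left does not commute with respect to $\pi$ (in general $\bar{\phi}(\pi(Y))\neq\phi(Y)$ for non-symmetric $Y$), while the one on the right commutes fully (indeed $\psi=\bar{\phi}\circ\pi$ and $\psi\circ\iota=\bar{\phi}$).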
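The commutation claims can be checked on a tiny, hand-computable example. In this NumPy sketch the concrete `a`, `b`, `Y`, `S` are arbitrary choices, and modelling the embedding $\iota$ as the identity on symmetric arrays is an assumption about how the diagrams are meant. $Y=E_{12}$ is deliberately non-symmetric.

```python
import numpy as np

a = np.array([1.0, 0.0])
b = np.array([0.0, 1.0])

def phi(Y):       # phi(Y) = a^T Y b on all 2 x 2 matrices
    return a @ Y @ b

def pi(Y):        # projection onto symmetric matrices: (Y + Y^T) / 2
    return 0.5 * (Y + Y.T)

def phi_bar(S):   # restriction of phi; only defined for symmetric S
    assert np.allclose(S, S.T), "phi_bar is only defined on symmetric matrices"
    return a @ S @ b

def psi(Y):       # psi(Y) = a^T (Y + Y^T) b / 2
    return a @ (Y + Y.T) @ b / 2

def iota(S):      # natural embedding: a symmetric matrix viewed as a general one
    return S

Y = np.array([[0.0, 1.0],
              [0.0, 0.0]])   # E_12, not symmetric
S = np.array([[2.0, 3.0],
              [3.0, 5.0]])   # symmetric

print(phi(Y), phi_bar(pi(Y)))      # 1.0 0.5 -> phi != phi_bar o pi (left diagram)
print(psi(Y), phi_bar(pi(Y)))      # 0.5 0.5 -> psi == phi_bar o pi (right diagram)
print(psi(iota(S)), phi_bar(S))    # 3.0 3.0 -> psi o iota == phi_bar
```

Both $\phi$ and $\psi$ agree with $\bar{\phi}$ on symmetric matrices; they only differ off the symmetric subspace, which is exactly where the entrywise partial derivatives look.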

Now, if we repeat the reasoning above for $\psi$ instead of $\phi$ (note that $\psi$ is also linear), we get

$$
\frac{\partial\psi}{\partial x_{ij}}(Y) = D\psi(Y)(E_{ij})
= \psi(E_{ij}) = a^T(E_{ij}+E_{ji})b/2 = (a_ib_j+a_jb_i)/2,
$$

and therefore

$$
\nabla\psi(Y) = (ab^T + ba^T)/2.
$$

This explains why there appear to be two different partial derivatives: we are simply differentiating two different functions, and the simplified notation, which disregards function names, cannot distinguish between them.
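The same point can be seen numerically with a finite-difference sketch. It perturbs each entry independently (so the perturbations $E_{ij}$ are not symmetric), evaluates at a symmetric $Y$ where $\phi$ and $\psi$ take the same value, and still recovers two different gradients. NumPy, the helper `fd_gradient`, the step `h`, and the concrete `a`, `b`, `Y` are illustrative assumptions; central differences are exact up to rounding here only because both functions are linear.

```python
import numpy as np

def fd_gradient(f, Y, h=1e-3):
    """Entrywise central differences: G[i, j] ~ df/dx_ij at Y (hypothetical helper)."""
    G = np.zeros_like(Y)
    for i in range(Y.shape[0]):
        for j in range(Y.shape[1]):
            E = np.zeros_like(Y)
            E[i, j] = 1.0                       # perturb only entry (i, j)
            G[i, j] = (f(Y + h * E) - f(Y - h * E)) / (2 * h)
    return G

a = np.array([1.0, 2.0, 3.0])
b = np.array([0.0, 1.0, -1.0])

def phi(Y):
    return a @ Y @ b

def psi(Y):
    return a @ (Y + Y.T) @ b / 2

Y = np.array([[2.0, 1.0, 0.0],
              [1.0, 3.0, 4.0],
              [0.0, 4.0, 5.0]])                 # symmetric evaluation point
print(np.isclose(phi(Y), psi(Y)))               # True: same value at symmetric Y

G_phi = fd_gradient(phi, Y)
G_psi = fd_gradient(psi, Y)
print(np.allclose(G_phi, np.outer(a, b)))                         # True
print(np.allclose(G_psi, (np.outer(a, b) + np.outer(b, a)) / 2))  # True
print(np.allclose(G_psi, (G_phi + G_phi.T) / 2))                  # True: grad psi = pi(grad phi)
print(np.allclose(G_phi, G_psi))                                  # False: different functions
```

The gradients differ even though $\phi(Y)=\psi(Y)$ for every symmetric $Y$, because the partial derivatives probe the non-symmetric directions $E_{ij}$, where the two functions disagree.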

`sym()` operator in this case. Rather, it is the application of `csym()` which produces a subtle error. – greg Oct 06 '21 at 08:12