Let $U_{k:n}$ be the $k$-th order statistic in a uniform sample of size $n$. $U_{k:n}$ is equal in distribution to a beta random variable with parameters $\alpha = k$ and $\beta=n+1-k$, so that

$$
\mathbb{E}\left( \frac{S_k}{S_{n+1}} \right) = \mathbb{E}(U_{k:n}) = \frac{k}{n+1}
$$

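Thus we need to find the expectation of the random variable $V_n = \max_{k=1}^n \left(U_{k:n} - \frac{k}{n+1} \right)$. Since $V_n \geqslant U_{1:n} - \frac{1}{n+1} \geqslant -\frac{1}{n+1}$ and $U_{k:n} - \frac{k}{n+1} \leqslant 1 - \frac{1}{n+1}$ for every $k$, we have $\mathbb{P}\left(-\frac{1}{n+1} \leqslant V_n \leqslant \frac{n}{n+1} \right) = 1$.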

Let $-\frac{1}{n+1} <z<\frac{n}{n+1}$, and consider

$$ \begin{eqnarray}
\mathbb{P}(V_n \leqslant z) &=& \mathbb{P}\left(\bigwedge_{k=1}^n \left(U_{k:n} \leqslant z+\frac{k}{n+1}\right)\right) = F_{U_{1:n}, \ldots, U_{n:n}}\left(z + \frac{1}{n+1},\ldots,z + \frac{n}{n+1} \right) \\
&=& \sum_{\begin{array}{c} s_1, \ldots, s_{n+1} \geqslant 0 \\ s_1+s_2 + \cdots+s_n+s_{n+1} = n \\ s_1 + \cdots + s_i \geqslant i, \; 1 \leqslant i \leqslant n \end{array}} \binom{n}{s_1,s_2,\ldots,s_{n+1}}\prod_{k=1}^{n+1} \left(F_U(z_k) - F_U(z_{k-1})\right)^{s_k}
\end{eqnarray}
$$

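where $z_i = z + \frac{i}{n+1}$ for $1 \leqslant i \leqslant n$, $z_0 = 0$, $z_{n+1} = 1$, $F_U$ is the CDF of the uniform distribution on $[0,1]$, and $s_k$ counts the sample points falling in $(z_{k-1}, z_k]$. This gives the exact law of $V_n$.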
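For rational $z$ the sum can be evaluated exactly. The following is a minimal Python sketch, not the method used for the results in this answer; the names `uniform_cdf` and `cdf_V` are illustrative. It enforces the event directly: with $s_k$ the number of sample points in $(z_{k-1}, z_k]$, the event $U_{i:n} \leqslant z_i$ for all $i$ means $s_1 + \cdots + s_i \geqslant i$ for every $i$, which also forces the last cell $(z_n, 1]$ to be empty. Instead of listing every admissible tuple it accumulates the terms cell by cell.

```python
from fractions import Fraction
from math import factorial


def uniform_cdf(x):
    """CDF of the uniform distribution on [0, 1], for Fraction input."""
    return min(max(x, Fraction(0)), Fraction(1))


def cdf_V(n, z):
    """Return P(V_n <= z) as an exact Fraction, for rational z."""
    z = Fraction(z)
    # F[i] = F_U(z_i) with z_0 = 0 and z_i = z + i/(n+1) for i = 1..n.
    F = [Fraction(0)] + [uniform_cdf(z + Fraction(i, n + 1)) for i in range(1, n + 1)]
    # Cell probabilities p_k = F_U(z_k) - F_U(z_{k-1}) for k = 1..n.
    # The cell (z_n, 1] is omitted: U_{n:n} <= z_n leaves it empty.
    p = [F[k] - F[k - 1] for k in range(1, n + 1)]
    # weights[c] = sum of prod_i p_i^{s_i} / s_i! over admissible
    # (s_1, ..., s_k) with s_1 + ... + s_k = c.
    weights = {0: Fraction(1)}
    for k in range(1, n + 1):
        new_weights = {}
        for c, w in weights.items():
            for s in range(n - c + 1):
                total = c + s
                if total < k:
                    # Fewer than k points at or below z_k: U_{k:n} > z_k.
                    continue
                term = w * p[k - 1] ** s / factorial(s)
                new_weights[total] = new_weights.get(total, Fraction(0)) + term
        weights = new_weights
    # Restore the multinomial coefficient n! / (s_1! ... s_n!).
    return factorial(n) * weights.get(n, Fraction(0))


print(cdf_V(2, 0))  # 1/3
```

The printed value can be confirmed by hand: for $n=2$ and $z=0$ both points must lie below $2/3$ and not both in $(1/3, 2/3]$, so the probability is $4/9 - 1/9 = 1/3$.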

Computation of the mean can be done as

$$
\mathbb{E}(V_n) = -\frac{1}{n+1} + \int\limits_{-\frac{1}{n+1}}^{\frac{n}{n+1}} \left( 1- \mathbb{P}(V_n \leqslant z) \right) \mathrm{d} z = \frac{n}{n+1} - \int\limits_{-\frac{1}{n+1}}^{\frac{n}{n+1}} \mathbb{P}(V_n \leqslant z) \mathrm{d} z
$$
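As a sanity check, the case $n=2$ can be worked by hand. This sketch uses only the elementary identity $\mathbb{P}(U_{1:2} \leqslant a, U_{2:2} \leqslant b) = b^2 - (b-a)^2$ for $0 \leqslant a \leqslant b \leqslant 1$ (both points at most $b$, minus both points in $(a, b]$), with $a = z + \frac{1}{3}$ and $b = \min\left(1, z + \frac{2}{3}\right)$:

$$
\mathbb{P}(V_2 \leqslant z) = \begin{cases} \left(z+\frac{2}{3}\right)^2 - \frac{1}{9}, & -\frac{1}{3} < z \leqslant \frac{1}{3} \\ 1 - \left(\frac{2}{3} - z\right)^2, & \frac{1}{3} < z < \frac{2}{3} \end{cases}
$$

$$
\int\limits_{-\frac{1}{3}}^{\frac{2}{3}} \mathbb{P}(V_2 \leqslant z) \, \mathrm{d} z = \frac{20}{81} + \frac{26}{81} = \frac{46}{81}, \qquad \mathbb{E}(V_2) = \frac{2}{3} - \frac{46}{81} = \frac{8}{81}
$$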

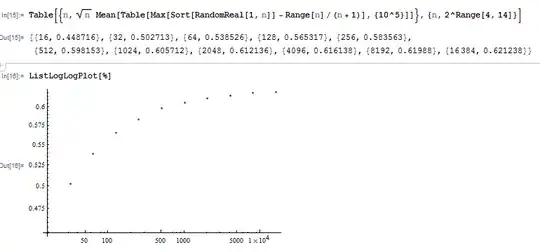

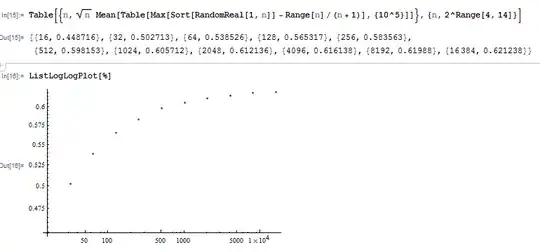

With this it is easy to evaluate the mean for small values of $n$:

    In[76]:= Table[n/(n + 1) -
       Integrate[(CDF[OrderDistribution[{UniformDistribution[], n}, Range[n]],
            z + Range[n]/(n + 1)]) // Simplify,
        {z, -1/(n + 1), n/(n + 1)}], {n, 1, 10}]

    Out[76]= {0, 8/81, 129/1024, 2104/15625, 38275/279936, 784356/5764801,
     18009033/134217728, 459423728/3486784401, 12913657911/100000000000,
     396907517500/3138428376721}

The denominators of $\mathbb{E}(V_n)$ in the list above equal $(n+1)^{n+2}$.

Exact computation of $\mathbb{E}(V_n)$ for $n$ much larger than $10$ is difficult because the cost of the symbolic computation grows quickly with $n$.

However, simulation is always at hand.
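A minimal Monte Carlo sketch in Python, using only the standard library, might look as follows. The function names, the default number of trials and the fixed seed are illustrative assumptions, not part of the computation above.

```python
import random


def sample_V(n, rng):
    """Draw one realisation of V_n = max_k (U_{k:n} - k/(n+1))."""
    u = sorted(rng.random() for _ in range(n))  # order statistics U_{1:n} <= ... <= U_{n:n}
    return max(u[k - 1] - k / (n + 1) for k in range(1, n + 1))


def estimate_mean_V(n, trials=100_000, seed=0):
    """Estimate E(V_n) by averaging independent draws of V_n."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    return sum(sample_V(n, rng) for _ in range(trials)) / trials


for n in (2, 10, 100):
    print(n, estimate_mean_V(n))
```

For $n=2$ the estimate should land close to the exact value $8/81 \approx 0.0988$. Since $V_n$ is confined to an interval of length $1$, its standard deviation is at most $1/2$, so the standard error of the estimate is at most $1/(2\sqrt{\text{trials}})$.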