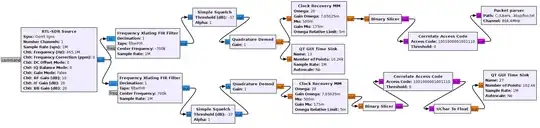

I am currently working on a project that uses an RTL-SDR to capture packets. I want to capture packets arriving on two different channels. I created the RF path shown above, and it works fine for capturing packets and converting them to digital form.

However, I also want to extract the payloads of those packets. I correlate on the SFD, and the Correlate Access Code block outputs a stream of zeros and ones, with the value 2 wherever the SFD is matched. Knowing that, I wrote a custom Python block with a simple if statement that looks for those 2s. This is where the problem begins. Without my custom packet parser block everything works fine, but with it in the RF path the flowgraph immediately gets overloaded and barely any packets are captured. I have placed the implementation of the packet parser block below.

Why is this block causing such a massive overload? It is a really simple implementation. I know that the sample rate is the usual troublemaker when it comes to overloading, but I am not willing to lower the sample rate any further. Is there a way to allow GNU Radio to use more CPU? GNU Radio is using only 13% of my CPU. I am happy to give it more, but I don't know how. I was also thinking about multithreading: running multiple concurrent loops that would partition and parse the incoming collections of bits.

    import datetime
    import sqlite3
    import uuid

    import numpy as np
    from gnuradio import gr


    class blk(gr.sync_block):

        def __init__(self, path='somePath', channel='channel'):
            gr.sync_block.__init__(
                self,
                name='Packet parser',
                in_sig=[np.byte],
                out_sig=[]
            )
            self.path = path
            self.channel = channel

        def work(self, input_items, output_items):
            # Scan the input buffer item by item for the SFD marker (value 2).
            for i in range(len(input_items[0])):
                if input_items[0][i] == 2:
                    # A connection is opened, committed and closed for every match.
                    conn = sqlite3.connect("anotherPath")
                    timestamp = str(datetime.datetime.now())
                    input_items[0][i] = 0
                    somePayloadBitsChanged = self.littleEndianToBig(input_items[0][i:i+64])
                    conn.execute(f"INSERT INTO Log (Guid,Timestamp,Channel,SomePayloadBitsChanged) VALUES ('{uuid.uuid4()}', '{timestamp}', '{self.channel}', '{somePayloadBitsChanged}')")
                    conn.commit()
                    conn.close()
            return 0

        def littleEndianToBig(self, somePayloadBits):
            try:
                array = np.array(somePayloadBits)
                # Pack the bits into bytes and keep the first packed byte.
                array = np.packbits(array, bitorder='big')
                somePayloadBitsChanged = array[0]
            except Exception:
                print("littleEndianToBig error")
                return 0
            return somePayloadBitsChanged
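
To make the multithreading idea mentioned above more concrete, here is a minimal sketch of one way it might look, using only the standard library. It is not tested inside GNU Radio. The names `find_payloads` and `LogWriter` are hypothetical, the `Log` table is assumed to exist already, and the payload is stored as raw bytes rather than going through `littleEndianToBig`. The idea is that `work` would only search the buffer and push matches onto a queue, while a single background thread owns one SQLite connection and does the inserts.

```python
import queue
import sqlite3
import threading
import uuid
from datetime import datetime

MARKER = 2          # value seen in the stream where the SFD is matched
PAYLOAD_BITS = 64   # slice length used in the block above


def find_payloads(buf: bytes):
    """Return the 64-item slice at every marker that fits entirely in buf.

    The marker item itself is replaced by 0, as in the block above.
    """
    payloads = []
    needle = bytes([MARKER])
    pos = buf.find(needle)
    while pos != -1:
        if pos + PAYLOAD_BITS <= len(buf):
            payloads.append(b"\x00" + buf[pos + 1:pos + PAYLOAD_BITS])
        pos = buf.find(needle, pos + 1)
    return payloads


class LogWriter(threading.Thread):
    """Hypothetical helper: one thread, one connection, fed through a queue."""

    def __init__(self, db_path, channel):
        super().__init__(daemon=True)
        self.db_path = db_path
        self.channel = channel
        self.jobs = queue.Queue()

    def run(self):
        # The connection is opened once, inside the thread that uses it.
        conn = sqlite3.connect(self.db_path)
        while True:
            payload = self.jobs.get()
            if payload is None:  # sentinel pushed by stop()
                break
            # Parameterized query instead of building the SQL with an f-string.
            conn.execute(
                "INSERT INTO Log (Guid, Timestamp, Channel, SomePayloadBitsChanged) "
                "VALUES (?, ?, ?, ?)",
                (str(uuid.uuid4()), str(datetime.now()), self.channel, payload),
            )
            conn.commit()
        conn.close()

    def stop(self):
        self.jobs.put(None)
        self.join()
```

Inside the block, `work` could then call `find_payloads(input_items[0].tobytes())` and `put` each result on `writer.jobs`. In this sketch, markers that are closer than 64 items to the end of the buffer are skipped; handling them would need some state carried over between calls to `work`.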