Real-time rendering, even modern real-time rendering, is a grab-bag of tricks, shortcuts, hacks and approximations.

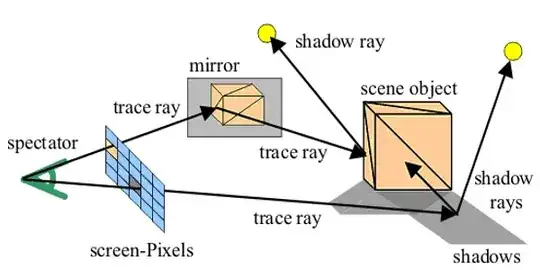

Take shadows for example.

We still don't have a completely accurate and robust mechanism for rendering real-time shadows from an arbitrary number of lights and arbitrarily complex objects. We do have many variants of shadow mapping, but they all suffer from the well-known problems of shadow maps (aliasing, self-shadowing "acne" and so on), and even the "fixes" for these are really just a collection of workarounds and trade-offs. As a rule of thumb, if you see the terms "depth bias" or "polygon offset" in anything, then it's not a robust technique.
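To make the depth-bias point concrete, here is a minimal sketch of the core shadow-map comparison. It assumes a deliberately coarse 8-bit depth map so the precision problems are easy to see (real shadow maps typically use 16, 24 or 32 bits), and the function names are hypothetical illustrations rather than any engine's API. The same bias that removes false self-shadowing also removes a genuine shadow cast by an occluder sitting close to the receiver (the "peter-panning" artifact).

```python
import math


def quantize(depth, bits):
    """Simulate storing a [0, 1] depth value in a fixed-precision shadow map.

    Hypothetical helper: rounds half up explicitly to avoid Python's
    round-half-to-even behaviour.
    """
    levels = 2 ** bits - 1
    return math.floor(depth * levels + 0.5) / levels


def in_shadow(fragment_depth, stored_depth, bias):
    """Classic shadow-map test: the fragment is shadowed if it lies
    further from the light than the nearest occluder recorded in the map.
    The bias shifts the fragment towards the light before comparing."""
    return fragment_depth - bias > stored_depth


# Case 1: a surface testing against its own stored depth.
# Quantization makes the stored value slightly smaller, so without bias
# the surface wrongly shadows itself ("shadow acne").
surface = 0.5037
acne = in_shadow(surface, quantize(surface, 8), bias=0.0)
fixed = in_shadow(surface, quantize(surface, 8), bias=0.005)

# Case 2: a real occluder just in front of the receiver.
# The same bias now hides a shadow that should be there ("peter-panning").
occluder, receiver = 0.500, 0.503
real_shadow = in_shadow(receiver, quantize(occluder, 8), bias=0.0)
lost_shadow = in_shadow(receiver, quantize(occluder, 8), bias=0.005)

print(acne, fixed, real_shadow, lost_shadow)
```

There is no single bias value that fixes case 1 without breaking case 2 at this precision; that tuning knob is exactly the kind of trade-off described above.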

Another trick used by real-time renderers is precalculation. If something (e.g. lighting) is too slow to calculate in real time (and this can depend on the lighting system you use), we can precalculate it and store the results, then use that precalculated data at runtime for a performance boost, which often comes at the expense of dynamic effects. This is a straight-up memory vs. compute trade-off: memory is often cheap and plentiful while compute often is not, so we spend the extra memory in exchange for a saving on compute.
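A minimal sketch of the idea, assuming simple per-vertex Lambertian (N·L) diffuse lighting stands in for whatever expensive lighting calculation you would really bake. The names are hypothetical. The bake spends one stored float per vertex so that runtime shading becomes a lookup, and the last lines show the cost: once the light moves, the baked data is stale until it is recomputed.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def lambert(normal, light_dir):
    # N.L clamped to zero. Cheap here, but imagine an expensive
    # global-illumination solve in its place.
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))


def bake_lighting(normals, light_dir):
    """Offline step: compute lighting once and store one float per vertex."""
    light_dir = normalize(light_dir)
    return [lambert(normalize(n), light_dir) for n in normals]


def shade_from_bake(baked, vertex_index):
    """Runtime step: no lighting maths at all, just a memory read."""
    return baked[vertex_index]


# Static geometry: vertices facing up, sideways (+x) and down.
normals = [(0, 1, 0), (1, 0, 0), (0, -1, 0)]
baked = bake_lighting(normals, light_dir=(0, 1, 0))  # light from above

# Later the light moves to +x. The sideways vertex should now be fully lit,
# but the baked value still says it is dark: the dynamic effect is lost.
live = bake_lighting(normals, light_dir=(1, 0, 0))
print(shade_from_bake(baked, 1), live[1])
```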

Offline renderers and modelling tools, on the other hand, tend to focus more on correctness and quality. Also, because they work with dynamically changing geometry (such as a model while you're building it), they must often recalculate things, whereas a real-time renderer typically works with final assets that don't have this requirement.