I have a dataset of 100,000 actual stars. I want to create a game where I can fly between the stars, fly up to them, click on them, etc. How do I deal with the scale of the universe? Even if I were to allow travel as fast as light, it would be too vast. Should I cluster my data and then implement some subtle form of scaling to move around? Sorry for the broad question, but I'm rather stuck at the moment.

This and this could be of use to you. – Vaillancourt Feb 18 '16 at 17:08

I would recommend breaking the universe up into galaxies. Use the conventions science already uses for splitting up the infinite set of sectors. – Krythic Feb 18 '16 at 17:09

Well, suppose you set up 100,000 stars: how can you simulate among these stars? And if you are going to travel at the speed of light, how would you examine any single star at that speed? You'll need some trick, like a stereo camera. So just forget about implementing it like the actual universe. – Hamza Hasan Feb 18 '16 at 17:15

It's unclear to me whether you expect an answer from a technical or a gameplay perspective, and if the latter, from a simulationist or gamist standpoint. Could you please clarify? – Philipp Feb 18 '16 at 17:22

I am apparently misreading your question, so to echo Philipp: are you talking about gameplay or data storage/data usage? Apparently answering the former causes my answer to be downvoted, so some clarification would be helpful. – dannuic Feb 18 '16 at 19:45

4 Answers

The only real problem you'll be fighting is floating-point precision: a 32-bit float holds only about 6 to 7 significant decimal digits. When you accumulate floating-point error over astronomical distances, you get astronomical inaccuracy. This shows up as jitter: distant bodies rapidly jump between two or more positions from frame to frame.
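A quick way to see the problem is to round values to 32-bit floats. The sketch below uses Python's standard `struct` module to emulate single precision (Python's own `float` is 64-bit). The kilometre unit and the 1.5e8 km distance (roughly the Earth-Sun distance) are illustrative assumptions.

```python
import struct

def to_f32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def f32_spacing(x: float) -> float:
    """Gap between positive x (as float32) and the next larger float32."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits + 1))[0] - to_f32(x)

# Illustrative: a ship ~1.5e8 km from the origin, moving 1 km per frame.
pos32 = to_f32(1.5e8)
pos64 = 1.5e8
for _ in range(1000):
    pos32 = to_f32(pos32 + 1.0)  # float32: each 1 km step rounds away
    pos64 = pos64 + 1.0          # float64: keeps every step

print(f32_spacing(1.0))    # ~1.19e-07: plenty of precision near the origin
print(f32_spacing(1.5e8))  # 16.0: float32 positions here snap to 16 km steps
print(pos32 - 1.5e8, pos64 - 1.5e8)  # 0.0 1000.0
```

After 1000 frames the single-precision ship has not moved at all, while the double-precision one has travelled 1000 km.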

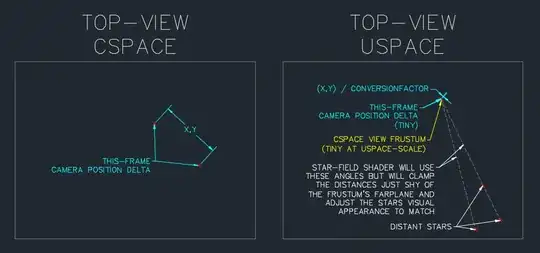

You'll likely need two coordinate systems and, in most cases, you should avoid multiplying to convert from the less precise system to the more precise one.

USpace = universal scale - ok for fixed star coordinates; precision will be lost once during loading

CSpace = "in-system" scale - arbitrary

NO:

CSpacePosition = USpacePosition * ConversionFactor

YES:

USpacePosition = CSpacePosition / ConversionFactor

In other words, don't move your camera around the universe by light-years at a time and attempt to render the changes at a larger scale.

A large change in CSpace is a small, accurate change in USpace.
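The NO/YES rule can be sketched by simulating one journey both ways in emulated single precision. Assumptions: a hypothetical `CONVERSION_FACTOR` of 1,000,000 CSpace units per USpace unit, a camera 1000 USpace units from the USpace origin, and a CSpace position measured from a hypothetical anchor point (`u_anchor`) near the camera so that its values stay small.

```python
import struct

def to_f32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

CONVERSION_FACTOR = 1_000_000.0  # hypothetical: CSpace units per USpace unit
STEP_C = 5.0                     # camera moves 5 CSpace units per frame
FRAMES = 1000

# NO: USpace is the source of truth (CSpace would be derived as u_no * factor),
# so every CSpace step has to be converted down and added to USpace.
u_no = to_f32(1000.0)
for _ in range(FRAMES):
    u_no = to_f32(u_no + STEP_C / CONVERSION_FACTOR)  # 5e-6 is below float32 resolution at 1000

# YES: CSpace is the source of truth; USpace is derived by dividing.
u_anchor = 1000.0  # hypothetical anchor the CSpace coordinates are measured from
c_yes = to_f32(0.0)
for _ in range(FRAMES):
    c_yes = to_f32(c_yes + STEP_C)
u_yes = to_f32(u_anchor + c_yes / CONVERSION_FACTOR)

print(u_no)   # 1000.0: the whole journey was lost to rounding
print(u_yes)  # ~1000.005: a large CSpace move became a small, accurate USpace move
```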

Re: comment:

The ViewMatrix is just the camera's WorldMatrix, inverted. That means whichever shader applies the ViewMatrix is already "un-moving" the objects (on the GPU). This is more efficient when you are rendering thousands of objects with thousands of WorldMatrices. For a relatively small number of high-detail models, you can afford to "un-apply" the camera's movement directly to each object's WorldMatrix on the CPU, instead of folding it into the ViewMatrix to be applied later on the GPU. I'm not sure if there's a name for the technique, but you're really just storing and updating the high-detail models pre-transformed into View/Camera-Space.
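The CPU-side "un-moving" can be sketched as follows; the general idea is commonly called camera-relative rendering. World positions stay in 64-bit (Python's `float`), and the camera position is subtracted before narrowing to the 32-bit floats a GPU typically consumes. Only the translation is handled, since that is where the precision is lost; the camera's rotation would still be applied afterwards. The positions are illustrative.

```python
import struct

def to_f32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

Vec3 = tuple[float, float, float]

def relative_naive(obj: Vec3, cam: Vec3) -> Vec3:
    """Both positions narrowed to float32 before subtracting."""
    return tuple(to_f32(to_f32(o) - to_f32(c)) for o, c in zip(obj, cam))

def relative_precise(obj: Vec3, cam: Vec3) -> Vec3:
    """Subtract in 64-bit on the CPU, then narrow the small result for the GPU."""
    return tuple(to_f32(o - c) for o, c in zip(obj, cam))

camera = (1.5e8, 0.0, 0.0)
ship = (1.5e8 + 3.25, 2.0, 0.0)  # a model 3.25 units in front of the camera

print(relative_naive(ship, camera))    # (0.0, 2.0, 0.0): the 3.25 offset is gone
print(relative_precise(ship, camera))  # (3.25, 2.0, 0.0)
```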

In the first pass, you'll feed in pre-View-Transformed spheres and they'll be rendered normally using whatever shaders are required (tess, glow, particle, etc.).

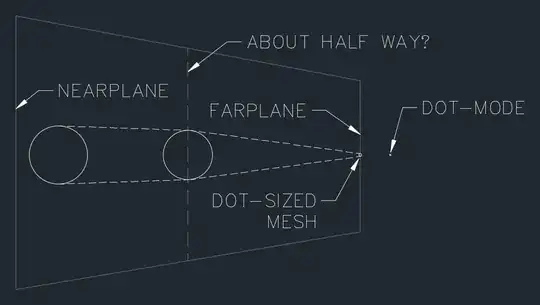

The second pass will feed the star coordinates loaded from your dataset directly to the shader (all 100k). This pass uses the same ViewMatrix, except that its translation component, which is zero in the first pass because those models are already pre-transformed, is replaced by the translation for the camera's USpace position. Because the starfield shader applies the ViewMatrix on the GPU, the full list only needs to be uploaded once. ViewSpace is centered on the camera at (0,0,0) so, after the shader applies the ViewMatrix, each vertex becomes a direction away from the camera. You can then scale each direction vector so that its length falls just inside the view frustum.
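The per-star math of that starfield pass can be sketched on the CPU like this. It handles only the translation (the rotation part of the ViewMatrix is omitted), and `margin` is a hypothetical parameter that keeps every star just inside the far plane.

```python
import math

def starfield_vertex(star_u, camera_u, far_plane, margin=0.99):
    """Turn a USpace star position into a point just inside the far plane."""
    # Camera-relative offset: the star's direction (and distance) from the camera.
    d = [s - c for s, c in zip(star_u, camera_u)]
    length = math.sqrt(sum(x * x for x in d))
    if length == 0.0:
        return None  # the camera sits exactly on the star; nothing to draw
    # Rescale so every star lies at the same distance, just inside the frustum.
    scale = far_plane * margin / length
    return tuple(x * scale for x in d)

# Two stars at very different distances end up on the same "sky sphere"
# of radius far_plane * margin (about 990 units here).
near = starfield_vertex((3.0, 4.0, 0.0), (0.0, 0.0, 0.0), far_plane=1000.0)
far = starfield_vertex((0.0, 0.0, 4.2e5), (0.0, 0.0, 0.0), far_plane=1000.0)
print(near, far)
```

Because only the direction survives, the stars' huge real distances never reach the depth buffer, which is what keeps them from jittering.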

Starfield extra credit:

I doubt a 100k point list is going to give your GPU much trouble, but you may be able to increase performance using the GeometryShader. After applying the View matrix, scaling to the far plane, applying the Projection matrix, and doing the perspective divide, all visible stars will have coordinates in the range -1 to 1, with depth 0 to 1 (the Direct3D convention). Emit the vertices that fall inside those ranges and skip the rest. That's called GPU culling and should allow you to push your vertex count beyond ridiculous.
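The keep-or-skip test described above can be sketched as below. It assumes a Direct3D-style left-handed perspective projection (depth mapped to 0..1); `fov_y`, `aspect`, `near`, and `far` are illustrative parameters, and in a real GeometryShader this would be shader code rather than Python.

```python
import math

def project_lh(p, fov_y, aspect, near, far):
    """View-space point -> clip space with a D3D-style left-handed perspective."""
    x, y, z = p
    y_scale = 1.0 / math.tan(fov_y / 2.0)
    x_scale = y_scale / aspect
    q = far / (far - near)
    return (x * x_scale, y * y_scale, z * q - near * q, z)  # (x, y, z, w)

def visible(clip):
    """True if the point lands inside the view volume after the divide."""
    x, y, z, w = clip
    if w <= 0.0:
        return False  # behind the camera
    nx, ny, nz = x / w, y / w, z / w  # perspective divide -> NDC
    return -1.0 <= nx <= 1.0 and -1.0 <= ny <= 1.0 and 0.0 <= nz <= 1.0

stars = [(0.0, 0.0, 500.0),     # straight ahead, inside the far plane
         (0.0, 0.0, -10.0),     # behind the camera
         (5000.0, 0.0, 500.0)]  # far off to the side
fov = math.radians(60)
kept = [s for s in stars if visible(project_lh(s, fov, 16 / 9, 1.0, 1000.0))]
print(kept)  # [(0.0, 0.0, 500.0)]
```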

Edit2:

@user1861013, it is arbitrary. If your camera moves about 10 CSpace units per real-world second, and you arbitrarily decide that a 100-USpace-unit journey should take 10 seconds, you can just do the math. We want to move 10 USpace units per second around the starfield, and we are actually "moving the camera" at 10 CSpace units per second: a conversion factor of 1.0f. If that "feels" too slow, try a smaller factor such as 0.5f; since USpacePosition = CSpacePosition / ConversionFactor, a smaller factor covers more USpace per second. There's no magic number; it should feel like a long journey, but you don't want the player to get bored and wander off every time they need to FTL. – Jon Feb 27 '16 at 16:03
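The arithmetic in that comment, written out as a pair of helpers. It assumes the answer's convention USpacePosition = CSpacePosition / ConversionFactor; the function names are hypothetical.

```python
def conversion_factor(camera_speed_c: float, journey_seconds: float,
                      journey_distance_u: float) -> float:
    """CSpace units per USpace unit so that the journey takes journey_seconds.

    With USpace = CSpace / factor, USpace speed = camera_speed_c / factor.
    """
    return camera_speed_c * journey_seconds / journey_distance_u

def journey_seconds(journey_distance_u: float, camera_speed_c: float,
                    factor: float) -> float:
    """How long a journey takes for a given factor (smaller factor = faster)."""
    return journey_distance_u * factor / camera_speed_c

# 10 CSpace units/s and a 100-USpace-unit trip that should take 10 s:
f = conversion_factor(10.0, 10.0, 100.0)
print(f, journey_seconds(100.0, 10.0, 0.5))  # 1.0 5.0
```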

As you approach a planet, lerp ConversionFactor back up to its normal (very large) value. As you exit mesh mode, lerp it back down toward the value that "feels right" for FTL. – Jon Feb 27 '16 at 16:12
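One way to do that blend is sketched below: each frame, move the factor a fraction of the way toward a target. `FTL_FACTOR`, `SYSTEM_FACTOR`, and `rate` are hypothetical tuning values, not numbers from the comment.

```python
FTL_FACTOR = 1.0       # hypothetical: the value that "feels right" for FTL
SYSTEM_FACTOR = 1.0e6  # hypothetical: the normal (very large) in-system value

def lerp(a: float, b: float, t: float) -> float:
    return a + (b - a) * t

def update_conversion_factor(current: float, near_planet: bool, dt: float,
                             rate: float = 2.0) -> float:
    """Ease ConversionFactor toward the in-system or FTL value over time."""
    target = SYSTEM_FACTOR if near_planet else FTL_FACTOR
    t = min(1.0, rate * dt)  # fraction of the remaining gap to close this frame
    return lerp(current, target, t)

factor = FTL_FACTOR
for _ in range(60):  # one second at 60 fps while approaching a planet
    factor = update_conversion_factor(factor, near_planet=True, dt=1 / 60)
print(factor)  # roughly 87% of the way to SYSTEM_FACTOR
```

Because the two values differ by orders of magnitude, blending the logarithm of the factor instead may feel more even; that is a design choice to try, not something the comment prescribes.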

@MarsYeti, it will be constant but the actual size is arbitrary. If your FARPLANE is 1000 units away, the first 500 units might shrink objects based on their normal perspective projection. Past 500 units, you'll pre-shrink the object (world matrix) by some factor, then project it as usual. The pre-shrinking is purely visual, the point being to make sure it's dot-sized by the time it is 1000 units away. – Jon Mar 04 '16 at 06:11
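The pre-shrink can be sketched as an extra scale applied to an object's world matrix before the usual projection. The 1000-unit far plane and 500-unit threshold come from the comment; the linear ramp and the `DOT_SCALE` end value are assumptions.

```python
FAR_PLANE = 1000.0
SHRINK_START = 500.0  # beyond this distance, objects are pre-shrunk
DOT_SCALE = 0.01      # hypothetical: extra scale that leaves an object dot-sized at FAR_PLANE

def pre_shrink(distance: float) -> float:
    """Extra uniform scale for the world matrix, applied before normal projection."""
    if distance <= SHRINK_START:
        return 1.0  # normal perspective only
    t = min(1.0, (distance - SHRINK_START) / (FAR_PLANE - SHRINK_START))
    return 1.0 + (DOT_SCALE - 1.0) * t  # linear ramp from 1.0 down to DOT_SCALE

for d in (250.0, 500.0, 750.0, 1000.0):
    print(d, pre_shrink(d))
```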

Check out this video of a ship warping in EVE Online. Notice how the star passed along the way stays in dot-mode, while the destination planet and space station both come into view as dots then expand in size exponentially. When they first come into view as dots, they are at the edge of CSpace. https://www.youtube.com/watch?v=GA3cSnoh-nk – Jon Mar 04 '16 at 06:29

Out of curiosity, why do you load every single star into the shader instead of searching for the stars inside the camera's extended frustum and loading only those? – MarsYeti Mar 08 '16 at 19:45

@MarsYeti, it is an optimization for your specific case. Your objects are very simple (a single vertex) so the vertex shader can quickly digest the entire list even though we know that most of those vertices will be culled, exactly like you suggest. On the CPU, we'd iterate them one at a time and do a frustum check for each. On the GPU, the vertex shader applies the ViewProjection matrix to all vertices in parallel. The ones that are beyond the viewing frustum are automatically culled on their way to the pixel shader, also in parallel. – Jon Mar 08 '16 at 20:41

@MarsYeti, allowing the GPU to process the entire list also means you only upload the list once. If you do culling on the CPU, you'll end up reconstructing a new list, each frame, and then have to spend more time re-re-re-re-uploading it. – Jon Mar 08 '16 at 20:47

Maybe you could divide your universe to allow travel at different levels and then "zoom" in to a more detailed level. I am thinking of concepts like quadrants and sectors, or maybe areas of ownership. You could then apply different speed/time implementations for the travel. In a single-player game/universe you can speed up time without any difficulties. If this is a multiplayer game, then you would probably need to use FTL travel or implement gateways/jumpgates to provide instantaneous travel.
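If you split the universe into sectors, one way to store positions is a hierarchical coordinate: an integer sector index plus a small local offset inside that sector, so offsets between nearby objects stay small. `SECTOR_SIZE` and the helper names are hypothetical; this is a sketch of one possible scheme, not one taken from the answer.

```python
import math
from dataclasses import dataclass

SECTOR_SIZE = 1000.0  # hypothetical: local units along one sector edge

@dataclass(frozen=True)
class SectorPos:
    sector: tuple[int, int, int]       # which sector (exact integers)
    local: tuple[float, float, float]  # offset inside it, kept in [0, SECTOR_SIZE) by normalize

def normalize(sector, local) -> SectorPos:
    """Carry any overflow of the local offset into the integer sector index."""
    new_sector, new_local = [], []
    for s, v in zip(sector, local):
        carry = math.floor(v / SECTOR_SIZE)
        new_sector.append(s + carry)
        new_local.append(v - carry * SECTOR_SIZE)
    return SectorPos(tuple(new_sector), tuple(new_local))

def offset(a: SectorPos, b: SectorPos) -> tuple[float, float, float]:
    """Vector from a to b in local units (precise when the sectors are near)."""
    return tuple((sb - sa) * SECTOR_SIZE + (lb - la)
                 for sa, la, sb, lb in zip(a.sector, a.local, b.sector, b.local))

ship = normalize((0, 0, 0), (1500.0, -10.0, 0.0))
print(ship)  # SectorPos(sector=(1, -1, 0), local=(500.0, 990.0, 0.0))
print(offset(SectorPos((0, 0, 0), (0.0, 0.0, 0.0)), ship))  # (1500.0, -10.0, 0.0)
```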

Nothing stops you from allowing players to go faster than the speed of light. Take a look at Space Engine (free) or Universe Sandbox to see how they do it.

Allow faster than light travel, or increase the speed of time during travel sections.

Depends on how you misinterpret the question; I focused on this part: "even If I were to allow fast as light travel it would be too vast." If he's building a game where the distances between stars are similar to those in the real universe, it would take years of in-game play to reach another star unless he allows faster-than-light travel or speeds up time during travel sections. – Skitskraj Feb 27 '16 at 09:06
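To put numbers on that: at light speed a trip of D light-years takes D years, so making it last a minute of play needs an enormous speed multiple, or equivalently the same time-compression factor. A quick calculation, assuming Proxima Centauri at about 4.24 light-years and a hypothetical 60-second target trip:

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year: light covers 1 ly in this time

def speed_multiple_of_c(distance_ly: float, trip_seconds: float) -> float:
    """Multiple of light speed (or time-compression factor) for the trip to take trip_seconds."""
    return distance_ly * SECONDS_PER_YEAR / trip_seconds

print(speed_multiple_of_c(4.24, 60.0))  # roughly 2.2 million times c
```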