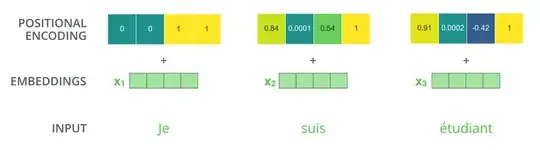

While reviewing the Transformer architecture, I realized something I didn't expect, which is that :

- the positional encoding is summed to the word embeddings

- rather than concatenated to it.

http://jalammar.github.io/images/t/transformer_positional_encoding_example.png

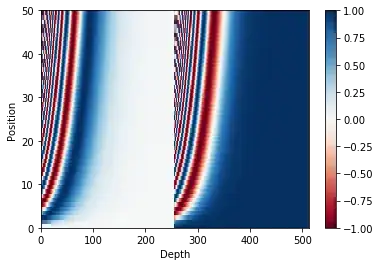

Based on the graphs I have seen wrt what the encoding looks like, that means that :

- the first few bits of the embedding are completely unusable by the network because the position encoding will distort them a lot,

- while there is also a large amount of positions in the embedding that are only slightly affected by the positional encoding (when you move further towards the end).

https://www.tensorflow.org/beta/tutorials/text/transformer_files/output_1kLCla68EloE_1.png

So, why not instead have smaller word embeddings (reduce memory usage) and a smaller positional encoding retaining only the most important bits of the encoding, and instead of summing the positional encoding of words keep it concatenated to word embeddings?

For example: I see in examples '128 weights out of 1024' being used for 4096 context, but log(4096) = 12. So couldn't 16 bits, or just 16 params be enough? I'm unconvinced that models aren't being wasteful.

– aphid Mar 18 '24 at 10:45