Yes, in theory the polynomial extension to logistic regression can approximate any classification boundary. That is because polynomials can approximate any continuous function on a bounded region (which covers the functions useful for classification problems) to arbitrary accuracy, as the Stone-Weierstrass theorem proves.
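As a small illustration of that approximation claim, here is a sketch using Bernstein polynomials, one classical constructive route to the Weierstrass result. The target function $|x - 0.5|$ on $[0, 1]$ and the helper names `bernstein`, `target` and `max_error` are my own choices for the demo, not anything from the question.

```python
from math import comb

def bernstein(f, n, x):
    """Degree-n Bernstein polynomial of f on [0, 1], evaluated at x."""
    return sum(
        f(k / n) * comb(n, k) * x**k * (1 - x) ** (n - k)
        for k in range(n + 1)
    )

def target(x):
    # Hypothetical target with a kink, so no single low-degree polynomial fits it exactly.
    return abs(x - 0.5)

def max_error(n, samples=201):
    """Largest gap between the target and its degree-n approximation on a grid."""
    grid = [i / (samples - 1) for i in range(samples)]
    return max(abs(bernstein(target, n, x) - target(x)) for x in grid)

# The worst-case error shrinks as the degree grows.
for n in (4, 16, 64):
    print(n, round(max_error(n), 4))
```

The error does fall with degree, but slowly, which already hints at the practicality problem.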

Whether this approximation is practical for all boundary shapes is another matter. When you suspect a complex boundary shape in feature space, you may be better off looking for other basis functions (e.g. Fourier series, or radial distance from example points), or other approaches entirely (e.g. SVM). The problem with high-order polynomials is that the number of polynomial features grows very quickly with both the degree of the polynomial and the number of original features: with $n$ features and degree $d$ there are $\binom{n+d}{d}$ terms.
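To get a feel for that growth, this sketch counts the monomials of total degree at most $d$ in $n$ variables, including the constant term. The function name `n_poly_features` and the sample sizes are just illustrative choices.

```python
from math import comb

def n_poly_features(n_features, degree):
    """Number of monomials of total degree <= degree in n_features variables."""
    return comb(n_features + degree, degree)

# A fourth-degree expansion for a few input sizes.
for n in (2, 10, 100):
    print(n, n_poly_features(n, 4))
```

With two inputs a fourth-degree expansion is small, but with a hundred inputs it already runs to millions of terms.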

You could make a polynomial to classify XOR. $5 - 10xy$ might be a start if you use $-1$ and $1$ as the binary inputs. This maps input $(x,y)$ to output as follows:

$$(-1,-1): -5 \qquad (-1,1): 15 \qquad (1,-1): 15 \qquad(1, 1): -5$$

Passing that into the logistic function should give you values close enough to 0 and 1.
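A quick way to check this is to evaluate the polynomial and the logistic function on all four inputs. This is only a sketch; the names `logistic` and `xor_score` are mine.

```python
import math

def logistic(z):
    return 1.0 / (1.0 + math.exp(-z))

def xor_score(x, y):
    """The polynomial 5 - 10xy, with inputs encoded as -1 and 1."""
    return 5 - 10 * x * y

# Print the raw score and the logistic output for every input pair.
for x in (-1, 1):
    for y in (-1, 1):
        z = xor_score(x, y)
        print((x, y), z, round(logistic(z), 4))
```

Inputs that differ get a positive score and a probability near 1; inputs that match get a negative score and a probability near 0.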

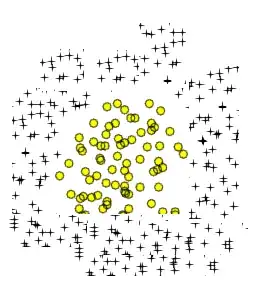

Similar to your two circular areas is a simple figure-of-eight curve:

$$a(x^2 - y^2 - bx^4 + c)$$

where $a, b$ and $c$ are constants. You can get two disjoint closed areas defined in your classifier - on opposite sides of the $y$ axis, by choosing $a, b$ and $c$ appropriately. For example try $a=1,b=0.05,c=-1$ to get a function that clearly separates into two peaks around $x=-3$ and $x=3$:

The plot shown is from an online tool at academo.org, and is for $x^2 - y^2 - 0.05x^4 -1>0$ - the positive class shown as value 1 in the plot above, and is typically where $\frac{1}{1+e^{-z}} > 0.5$ in logistic regression or just $z>0$

An optimiser will find the best values; you would just need to use $1, x^2, y^2, x^4$ as your expansion terms. Note that these specific terms are limited to matching the same basic shape reflected around the $y$ axis. In practice you would want multiple terms up to a fourth-degree polynomial to find more arbitrary disjoint groups in a classifier.
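To make the "expansion terms" idea concrete, the sketch below writes the classifier as an ordinary logistic regression that is linear in the expanded features. The weights are set by hand from $a=1, b=0.05, c=-1$ rather than learned, and the names `expand`, `weights` and `predict_proba` are illustrative only; a real fit would let the optimiser choose the weights.

```python
import math

a, b, c = 1.0, 0.05, -1.0  # constants from the example above

def expand(x, y):
    """Expansion terms 1, x^2, y^2, x^4 for one input point."""
    return [1.0, x**2, y**2, x**4]

# Hand-set weights (an assumption for the demo) reproducing a(x^2 - y^2 - b x^4 + c).
weights = [a * c, a, -a, -a * b]

def score(x, y):
    """Linear score z: dot product of the weights with the expanded features."""
    return sum(w * f for w, f in zip(weights, expand(x, y)))

def predict_proba(x, y):
    return 1.0 / (1.0 + math.exp(-score(x, y)))

# Two points near the peaks, then three points outside both regions.
for point in [(-3, 0), (3, 0), (0, 0), (3, 3), (6, 0)]:
    print(point, round(predict_proba(*point), 3))
```

The points near $x=-3$ and $x=3$ land in the positive class, while the origin and the outlying points do not, so a single linear model over these four features produces two disjoint positive regions.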

In fact, any problem you can solve with a deep neural network - of any depth - you can solve with a flat structure using linear regression (for regression problems) or logistic regression (for classification problems). It is "just" a matter of finding the right feature expansion. The difference is that neural networks will attempt to discover a working feature expansion directly, whilst feature engineering using polynomials or any other scheme is hard work, and it is not always obvious how to even start. Consider, for example, how you might create polynomial approximations to what convolutional neural networks do for images. It seems impossible, and it is likely to be extremely impractical, but such an expansion does exist.