I am working with a dataset on crop insurance for soybeans. My goal is to build a classification model that predicts, from bioclimatic variables, whether the insurance for a soybean crop will be activated. The dataset has 267 observations: 171 in which the insurance was not activated (Target = 0) and 96 in which it was activated (Target = 1, the class I am interested in predicting). As Figure 1 shows, the two classes overlap.

In Figure 1, "BG" stands for Background; for simplicity, I am not addressing it for now. Class 1 appears to be almost a subset of class 0.

Despite tuning the hyperparameters of random forest (RF), XGBoost (XGB), and RBF-kernel SVM (SVM_RBF) models, all of them performed poorly, even within 4-fold cross-validation on the training data. I also tried ADASYN oversampling to balance the classes, but the results did not improve: Youden's J statistic stayed around 0.1, and the AUC never exceeded 0.6.
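Because the classes are imbalanced (171 vs. 96), a stratified split keeps the class ratio roughly constant across folds. Below is a minimal pure-Python sketch of stratified 4-fold splitting; the function name `stratified_kfold_indices` and the seed are illustrative assumptions, not part of the original workflow (scikit-learn's `StratifiedKFold` does the same job). If ADASYN is used, it would typically be fit on the training indices of each fold only, so synthetic samples never reach the evaluation fold.

```python
import random


def stratified_kfold_indices(labels, n_splits=4, seed=0):
    """Yield (train_idx, test_idx) pairs whose test folds keep roughly the
    class ratio of the full dataset."""
    rng = random.Random(seed)
    # Group sample indices by class label.
    by_class = {}
    for i, y in enumerate(labels):
        by_class.setdefault(y, []).append(i)
    # Shuffle each class separately, then deal its indices round-robin into folds.
    folds = [[] for _ in range(n_splits)]
    for idx in by_class.values():
        idx = idx[:]
        rng.shuffle(idx)
        for pos, i in enumerate(idx):
            folds[pos % n_splits].append(i)
    for k in range(n_splits):
        test = sorted(folds[k])
        train = sorted(i for j, fold in enumerate(folds) if j != k for i in fold)
        yield train, test


# Class counts from the question: 171 negatives (Target = 0), 96 positives (Target = 1).
labels = [0] * 171 + [1] * 96
for train, test in stratified_kfold_indices(labels):
    # Resampling such as ADASYN would be fit on `train` only, never on `test`.
    n_pos = sum(labels[i] for i in test)
    print(f"test size={len(test)}, positives={n_pos}, train size={len(train)}")
```

With these counts, every test fold holds 24 positives and 66 or 67 observations in total.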

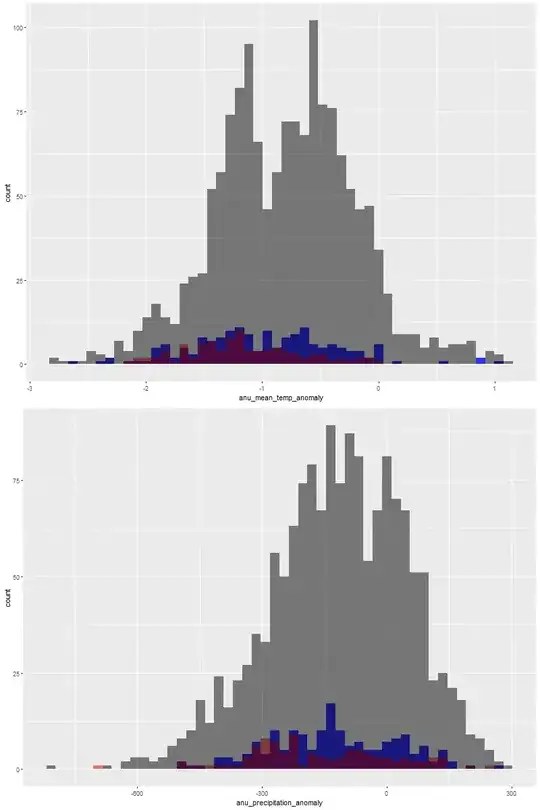

Figure 2 illustrates the same overlap problem for a single variable. Black bars represent BG, red bars Target = 0, and blue bars Target = 1.
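One way to put a number on the overlap visible in a single-variable histogram is the histogram overlap coefficient: the sum, over shared bins, of the smaller of the two normalized bin frequencies (0 means the classes are disjoint, 1 means identical histograms). The sketch below assumes equal-width bins over the pooled range; the bin count, helper names, and toy values are hypothetical. `fraction_inside_range` checks the "almost a subset" impression directly by counting how many class-1 values fall within the class-0 range.

```python
def histogram_overlap(a, b, n_bins=10):
    """Overlap coefficient of two samples: sum over shared bins of the smaller
    of the two normalized bin frequencies (0 = disjoint, 1 = identical)."""
    lo, hi = min(min(a), min(b)), max(max(a), max(b))
    width = (hi - lo) / n_bins or 1.0  # guard against a zero-width range

    def freqs(xs):
        counts = [0] * n_bins
        for x in xs:
            k = min(int((x - lo) / width), n_bins - 1)  # put the max value in the last bin
            counts[k] += 1
        return [c / len(xs) for c in counts]

    return sum(min(p, q) for p, q in zip(freqs(a), freqs(b)))


def fraction_inside_range(inner, outer):
    """Share of `inner` values lying within [min(outer), max(outer)]."""
    lo, hi = min(outer), max(outer)
    return sum(lo <= x <= hi for x in inner) / len(inner)


# Hypothetical values of one bioclimatic variable for each class.
x0 = [1, 2, 3, 4, 5, 6, 7, 8]  # Target = 0
x1 = [3, 4, 5, 6]              # Target = 1
print(histogram_overlap(x0, x1, n_bins=4))  # 0.5
print(fraction_inside_range(x1, x0))        # 1.0: every class-1 value is inside the class-0 range
```

Computing both numbers per variable gives a rough ranking of which predictors separate the classes at all.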

The variables were selected based on pairwise correlation, variance inflation factor (VIF), and their importance for crop suitability. After reading some papers, I found two potential approaches to this issue:

1. Delete the overlapping data points from the training set and train the model on the remaining data.
2. Create a third class for the overlapping points (e.g., Target = 3) and proceed with the classification.

Which approach do you think I should take? I understand that there is no single solution to this problem, and I plan to test different approaches, but I would like to hear your opinions.
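Both options need an operational definition of "overlapping". The sketch below assumes one: a point counts as overlapping when most of its k nearest neighbours (k = 3 here) carry the other label, which is essentially the edited-nearest-neighbours rule (imbalanced-learn provides a related undersampler, `EditedNearestNeighbours`). The helper names, k, and the toy points are hypothetical. With real data, features would be standardized before computing Euclidean distances, and either transformation would be applied inside each training fold only.

```python
import math


def flag_overlap(X, y, k=3):
    """Indices of points whose k nearest neighbours (Euclidean distance,
    excluding the point itself) mostly carry a different label."""
    flagged = []
    for i, xi in enumerate(X):
        dists = sorted((math.dist(xi, xj), j) for j, xj in enumerate(X) if j != i)
        same = sum(y[j] == y[i] for _, j in dists[:k])
        if same <= k // 2:  # no majority agrees with the point's own label
            flagged.append(i)
    return flagged


def drop_overlap(X, y, k=3):
    """Option 1: remove flagged points from the training data."""
    bad = set(flag_overlap(X, y, k))
    keep = [i for i in range(len(X)) if i not in bad]
    return [X[i] for i in keep], [y[i] for i in keep]


def relabel_overlap(X, y, k=3, new_label=3):
    """Option 2: give flagged points their own class label."""
    bad = set(flag_overlap(X, y, k))
    return [new_label if i in bad else lab for i, lab in enumerate(y)]


# Hypothetical toy training set: two clusters plus one Target = 1 point
# sitting inside the Target = 0 cluster.
X = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5), (0.5, 0.5)]
y = [0, 0, 0, 1, 1, 1, 1]
print(flag_overlap(X, y))      # [6]
print(drop_overlap(X, y)[1])   # [0, 0, 0, 1, 1, 1]
print(relabel_overlap(X, y))   # [0, 0, 0, 1, 1, 1, 3]
```

Note that with option 1 the model never sees hard cases during training, while the test fold still contains them, so cross-validated scores are the fair way to compare the two options.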

Note: Both statistics (AUC and Youden's J) were calculated using the standard 0.5 probability cutoff.
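AUC is defined over all cutoffs, so it is normally computed from predicted probabilities. When it is instead computed from 0/1 predictions at a single cutoff, it collapses to (J + 1)/2; in that case a J of about 0.1 would correspond to an AUC of about 0.55. The sketch below, on hypothetical scores, computes AUC through its rank (Mann-Whitney) interpretation, Youden's J at 0.5, and the cutoff that maximizes J. Function names are illustrative; scikit-learn's `roc_auc_score` and `roc_curve` cover the same ground.

```python
def auc(scores, labels):
    """ROC AUC as the probability that a random positive scores higher than a
    random negative (ties count 1/2); no cutoff is involved."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def youden_j(scores, labels, cutoff):
    """J = sensitivity + specificity - 1, predicting class 1 when score >= cutoff."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    tp = sum(s >= cutoff and y == 1 for s, y in zip(scores, labels))
    tn = sum(s < cutoff and y == 0 for s, y in zip(scores, labels))
    return tp / n_pos + tn / n_neg - 1


def best_cutoff(scores, labels):
    """Observed score that maximises Youden's J when used as the cutoff."""
    return max(sorted(set(scores)), key=lambda c: youden_j(scores, labels, c))


# Hypothetical predicted probabilities for Target = 1; only one exceeds 0.5.
scores = [0.10, 0.15, 0.20, 0.25, 0.30, 0.35, 0.40, 0.55]
labels = [0, 0, 0, 0, 1, 0, 1, 1]
hard = [int(s >= 0.5) for s in scores]  # 0/1 predictions at the 0.5 cutoff

print(auc(scores, labels))              # from probabilities: 14/15
print(youden_j(scores, labels, 0.5))    # J at 0.5: about 1/3
print(auc(hard, labels))                # from 0/1 predictions: (J + 1) / 2, about 2/3
c = best_cutoff(scores, labels)
print(c, youden_j(scores, labels, c))   # 0.3, with J of about 0.8
```

A cutoff chosen this way should be picked on the training or validation folds and then applied unchanged to the held-out fold.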