Problem Overview

I want to store log files securely, so that their contents stay secret and cannot be modified without detection.

Each file will be encrypted with authenticated encryption (AES in GCM mode), using a random IV and a random symmetric key generated for that file. The symmetric key will be encrypted with the public key of an RSA key pair. Both the IV and the encrypted symmetric key will be included in the additional authenticated data (AAD).
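One detail worth pinning down is how the IV and the encrypted key are serialized into the AAD. The sketch below assumes a simple layout in which each field is preceded by its 4-byte big-endian length, so that bytes cannot silently shift from one field into the next. The helpers `build_aad` and `parse_aad` are hypothetical, and the AES-GCM call itself is not shown; with pyca/cryptography, the resulting bytes would be passed as the `associated_data` argument of `AESGCM.encrypt(nonce, data, associated_data)`.

```python
import struct


def build_aad(iv: bytes, wrapped_key: bytes) -> bytes:
    """Encode the IV and the RSA-encrypted file key as length-prefixed fields."""
    # Each field is preceded by its length as a 4-byte big-endian integer,
    # so the boundary between the two fields is unambiguous.
    return b"".join(struct.pack(">I", len(f)) + f for f in (iv, wrapped_key))


def parse_aad(aad: bytes) -> list[bytes]:
    """Inverse of build_aad; raises ValueError on truncated input."""
    fields = []
    pos = 0
    while pos < len(aad):
        if pos + 4 > len(aad):
            raise ValueError("truncated length prefix")
        (n,) = struct.unpack_from(">I", aad, pos)
        pos += 4
        if pos + n > len(aad):
            raise ValueError("truncated field")
        fields.append(aad[pos:pos + n])
        pos += n
    return fields


if __name__ == "__main__":
    import os

    iv = os.urandom(12)            # 96-bit IV, the usual size for GCM
    wrapped_key = os.urandom(256)  # placeholder for an RSA-2048 ciphertext
    aad = build_aad(iv, wrapped_key)
    print(len(aad))  # 4 + 12 + 4 + 256 = 276
```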

This gives me confidentiality, integrity and authenticity, but only for each individual log file.

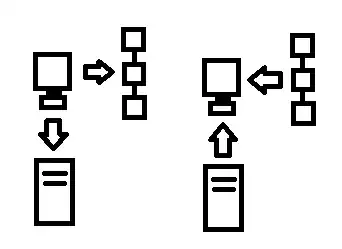

For example, suppose I have the log files 2013-01-01.log, 2013-01-05.log and 2013-02-09.log: an attacker could delete 2013-01-05.log without detection, because the two remaining files would still verify on their own.
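The gap can be demonstrated in a few lines. In this sketch HMAC-SHA256 with a hypothetical demo key stands in for the per-file GCM tag; the only point is that each file is checked in isolation, so removing one file leaves every remaining check intact.

```python
import hashlib
import hmac

# Hypothetical demo key. HMAC-SHA256 stands in for the per-file GCM tag.
KEY = b"demo-key"


def make_tag(body: bytes) -> bytes:
    return hmac.new(KEY, body, hashlib.sha256).digest()


def all_files_verify(store: dict[str, tuple[bytes, bytes]]) -> bool:
    """Verify each stored (body, tag) pair independently, as per-file AEAD does."""
    return all(hmac.compare_digest(make_tag(body), tag) for body, tag in store.values())


store = {
    name: (body, make_tag(body))
    for name, body in [
        ("2013-01-01.log", b"log entries for 1 Jan"),
        ("2013-01-05.log", b"log entries for 5 Jan"),
        ("2013-02-09.log", b"log entries for 9 Feb"),
    ]
}

del store["2013-01-05.log"]     # the attacker removes one file
print(all_files_verify(store))  # True: nothing notices the deletion
```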

I've come up with two possible solutions.

Possible Solution 1

The program could maintain an encrypted (and possibly RSA-signed) 'counter file' containing a sequence number that is incremented every time a new log file is written. The sequence number would become part of the log filename and would also be included in the additional authenticated data. We could therefore detect any 'gaps' left by missing files.
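The gap check in Solution 1 might look like the sketch below. It assumes a hypothetical naming scheme with a zero-padded sequence number before the date (for example `000002-2013-01-05.log`), sequence numbers starting at 1, and a `counter` value already read from the authenticated counter file. In the full scheme, each file's sequence number would also be compared with the value stored in its AAD after decryption; that step is omitted here.

```python
import re

# Hypothetical naming scheme: zero-padded sequence number, then the date.
NAME_RE = re.compile(r"^(\d{6})-.*\.log$")


def log_name(seq: int, date: str) -> str:
    return f"{seq:06d}-{date}.log"


def find_gaps(filenames: list[str], counter: int) -> list[int]:
    """Return the sequence numbers in 1..counter that have no matching file.

    `counter` is the sequence number of the most recently written log,
    as recorded in the authenticated counter file.
    """
    seen = set()
    for name in filenames:
        m = NAME_RE.match(name)
        if m:
            seen.add(int(m.group(1)))
    return [seq for seq in range(1, counter + 1) if seq not in seen]


names = [log_name(1, "2013-01-01"), log_name(3, "2013-02-09")]
print(find_gaps(names, 3))  # [2]: the file for sequence number 2 is missing
```

Because the counter records the latest sequence number, deleting the newest file is caught as well: `find_gaps(names, 4)` reports both 2 and 4.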

Possible Solution 2

The program could maintain an encrypted (and possibly RSA-signed) 'database file' containing the filenames of all previously written log files. We could therefore detect any 'gaps' left by missing files.
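Solution 2 can be sketched as an authenticated manifest. Here HMAC-SHA256 with a hypothetical secret key is swapped in for the "encrypted and possibly RSA-signed" protection; note that, unlike an RSA signature, an HMAC can only be verified by someone holding the same secret key. The JSON layout and the helper names are assumptions for illustration.

```python
import hashlib
import hmac
import json

# Hypothetical secret key; HMAC-SHA256 stands in for the RSA signature.
MANIFEST_KEY = b"demo-manifest-key"
TAG_LEN = 32  # length of a SHA-256 HMAC tag in bytes


def seal_manifest(filenames: list[str]) -> bytes:
    """Serialize the list of written logs and append an HMAC tag."""
    body = json.dumps(sorted(filenames)).encode()
    return body + hmac.new(MANIFEST_KEY, body, hashlib.sha256).digest()


def open_manifest(blob: bytes) -> list[str]:
    """Verify the tag and return the recorded filenames."""
    body, tag = blob[:-TAG_LEN], blob[-TAG_LEN:]
    expected = hmac.new(MANIFEST_KEY, body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("manifest has been tampered with")
    return json.loads(body)


def missing_files(blob: bytes, present: list[str]) -> list[str]:
    """Names recorded in the manifest that are no longer on disk."""
    on_disk = set(present)
    return [name for name in open_manifest(blob) if name not in on_disk]


blob = seal_manifest(["2013-01-01.log", "2013-01-05.log", "2013-02-09.log"])
print(missing_files(blob, ["2013-01-01.log", "2013-02-09.log"]))  # ['2013-01-05.log']
```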

The Question

I'd like feedback on my two possible solutions: do they work, do they need any changes, and have I missed anything?

Or are there better solutions to my problem?

HMAC(key, filename || contents)? There's no need for a unique key for every file. There's no need for encryption. You can also use something like `chattr` to set the immutable flag, which can only be removed on reboot. – Stephen Touset Apr 24 '13 at 16:49
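The comment's HMAC(key, filename || contents) suggestion can be sketched with Python's standard `hmac` module. Binding the filename into the MAC means a file cannot be renamed (for example, moved to another date) without detection, although a per-file MAC alone still does not reveal a deleted file. The 4-byte length prefix on the filename is an assumption added here: with plain concatenation, ("a.log", b"x") and ("a.lo", b"gx") would produce the same MAC input. The helper names are hypothetical.

```python
import hashlib
import hmac
import struct


def log_tag(key: bytes, filename: str, contents: bytes) -> bytes:
    """HMAC-SHA256 over a length-prefixed filename followed by the contents."""
    name = filename.encode("utf-8")
    msg = struct.pack(">I", len(name)) + name + contents
    return hmac.new(key, msg, hashlib.sha256).digest()


def verify_log(key: bytes, filename: str, contents: bytes, tag: bytes) -> bool:
    """Constant-time check that `tag` matches this filename and contents."""
    return hmac.compare_digest(log_tag(key, filename, contents), tag)


key = b"k" * 32  # hypothetical key for illustration
tag = log_tag(key, "2013-01-05.log", b"log entries for 5 Jan")
print(verify_log(key, "2013-01-05.log", b"log entries for 5 Jan", tag))  # True
print(verify_log(key, "2013-01-06.log", b"log entries for 5 Jan", tag))  # False: renamed
```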