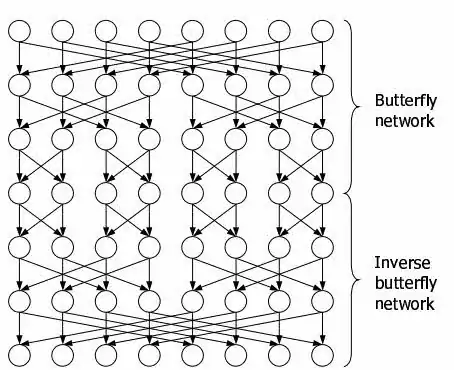

I'm not a math professional. While researching algorithms that provide a good permutation, I found references to Butterfly, Benes and Cross permutations.

But all the papers I found actually discuss enhancements to these algorithms and/or CPU instructions for them. I saw a lot of images like this one, for BENES:

Since I'm not familiar with some aspects of that kind of image, I would like to understand some things based on the following image:

1- I understand that the Control-Byte is applied against the Data-Byte to decide which bits get swapped.

2- The YELLOW bit can be ZERO or ONE (it depends on the corresponding Control-Bit). If it is ZERO, it affects the RED circle; if it is ONE, it goes straight to the PURPLE one.

3- But the CYAN bit may also affect the same bits!

It's weird and confusing!

Let's suppose my CONTROL-BYTE is 00001111 or even 11110000 (in binary). In these cases, I will see YELLOW or CYAN affecting the same result bit (PURPLE or RED).
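To make the question concrete, here is a minimal sketch in C (it should port to C# almost line for line) of how a single butterfly stage is commonly described: the data bits are grouped into pairs a fixed distance apart, and each pair shares one control bit that either swaps the two bits or passes them straight through. The names `butterfly_stage` and `butterfly_network` are hypothetical, and so are two conventions used here: a control bit of 1 means "swap", and the control bit for a pair is stored at the position of the pair's lower bit. The image may use the opposite convention, so treat this as one possible reading and not as the papers' exact definition.

```c
#include <stdint.h>

/* One butterfly stage on an 8-bit value (assumed convention: 1 = swap).
 * distance: gap between the two bits of each pair (4, 2 or 1).
 * control:  one bit per pair, read at the position of the pair's lower
 *           bit; control bits at other positions are ignored. */
uint8_t butterfly_stage(uint8_t data, uint8_t control, unsigned distance)
{
    uint8_t low_mask; /* positions of the lower bit of every pair */

    switch (distance) {
    case 4: low_mask = 0x0F; break; /* pairs (0,4) (1,5) (2,6) (3,7) */
    case 2: low_mask = 0x33; break; /* pairs (0,2) (1,3) (4,6) (5,7) */
    case 1: low_mask = 0x55; break; /* pairs (0,1) (2,3) (4,5) (6,7) */
    default: return data;           /* unsupported distance: no change */
    }

    /* diff has a 1 wherever a selected pair holds two different bits;
     * flipping both bits of such a pair is the same as swapping them. */
    uint8_t diff = (uint8_t)((data ^ (data >> distance)) & control & low_mask);
    return (uint8_t)(data ^ diff ^ (diff << distance));
}

/* Three stages in a row (distances 4, 2, 1), each with its own control
 * value. This is a sketch of a full 8-bit butterfly network. */
uint8_t butterfly_network(uint8_t data, uint8_t c4, uint8_t c2, uint8_t c1)
{
    data = butterfly_stage(data, c4, 4);
    data = butterfly_stage(data, c2, 2);
    return butterfly_stage(data, c1, 1);
}
```

Under these assumptions, `butterfly_stage(0xB1, 0x0F, 4)` swaps the two nibbles and returns `0x1B`, while a control value of `0x00` leaves the data unchanged. If this reading is right, two bits such as YELLOW and CYAN that feed the same pair of outputs would be governed by a single shared control bit, so only four control bits per stage would matter for a byte.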

I tried to research some "basic" math to understand it, but I really had no success at all!

I would like to write a C# routine (I'm not worried about performance) that applies the same scheme to BITS, BYTES and even INTEGERS...

Thank you in advance for your patience in explaining this to me.

UPDATE

Some links where I found the information above:

Fast Subword Permutation Instructions Using Omega and Flip Network Stages

Efficient Permutation Instructions for Fast Software Cryptography