I found an authoritative document confirming that ALMA’s ADCs are indeed only 3 bits: the ALMA Technical Handbook, https://almascience.nrao.edu/documents-and-tools/cycle7/alma-technical-handbook/view .

From Chapter 5.6.1:

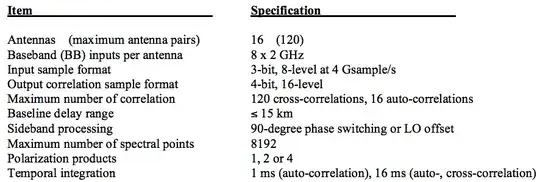

"A digitizer adds quantization noise to its input analog signal, with a consequent signal-to-noise reduction or sensitivity loss. The ALMA digitizer employs 3-bit (8-level) quantization, and additional re-quantization processes are applied in the correlators."

One could ask this question a different way: “What would be the benefit of adding bits (beyond 3) to ALMA’s ADCs?” You don’t get much more sensitivity, since the 3-bit ADCs are already ~96% efficient (as noted in Ben Barsdell’s excellent answer). You don’t get better angular resolution, since angular resolution in interferometry is set by the signal wavelength and the antenna geometry (greater distances between antenna pairs give finer angular resolution). On the other hand, you do get considerable additional computational load. The one good thing you get from adding bits to your ADC is dynamic range: you can pick up a fainter signal in the presence of noise or interference that would otherwise saturate your ADC. Hence the statement made by ALMA that they want to observe in frequency bands that aren’t restricted.
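To put a number on the "~96% efficient" figure, here is a minimal sketch (not ALMA's actual calculation) of the textbook weak-signal quantization efficiency, η = (E[x·Q(x)])² / E[Q(x)²], for unit-variance Gaussian noise sampled at the Nyquist rate. The function names, the evenly spaced levels, and the threshold spacings are my own assumptions; the spacings are approximate near-optimal values, not numbers taken from the handbook.

```python
import math

def gaussian_pdf(x):
    """Standard normal probability density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def gaussian_cdf(x):
    """Standard normal cumulative distribution."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def quantization_efficiency(n_levels, step):
    """Weak-correlation efficiency of a symmetric, evenly spaced quantizer.

    `step` is the threshold spacing in units of the input rms (assumed
    unit-variance Gaussian noise). Output levels are +/-0.5, +/-1.5, ...
    in units of `step`; the outermost bins extend to infinity (clipping).
    """
    half = n_levels // 2
    mean_xq = 0.0  # E[x * Q(x)] over the positive half
    mean_q2 = 0.0  # E[Q(x)^2] over the positive half
    for k in range(half):
        lo = k * step
        hi = (k + 1) * step if k < half - 1 else math.inf
        level = k + 0.5
        mean_xq += level * (gaussian_pdf(lo) - gaussian_pdf(hi))
        mean_q2 += level ** 2 * (gaussian_cdf(hi) - gaussian_cdf(lo))
    # Symmetry doubles both sums: (2a)^2 / (2b) = 2 a^2 / b
    return 2.0 * mean_xq ** 2 / mean_q2

# Assumed, approximately optimal threshold spacings (in units of the rms)
for bits, step in [(1, 1.0), (2, 0.996), (3, 0.586), (4, 0.335)]:
    eta = quantization_efficiency(2 ** bits, step)
    print(f"{bits}-bit: efficiency ~ {eta:.3f}")
```

Under these assumptions the 3-bit case comes out near 0.96, and going to 4 bits buys only a few percent more, which is the point made above.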

I agree that it’s unintuitive that 3-bit ADCs are sufficient for an instrument as incredible as ALMA. But remember that Nyquist says you might have more data than you think you do:

"A bandlimited continuous-time signal can be sampled and perfectly reconstructed from its samples if the waveform is sampled over twice as fast as its highest frequency component."

ALMA can sample at the Nyquist rate (most radio telescopes set it to about 2.1x the upper end of the observing frequency window) or at twice the Nyquist rate.

The digitized data is the raw data, and on its own it doesn’t look like it contains any information. But once the digitized data is run through an FFT, you get a spectrogram that reveals the wealth of information that was in the raw data. Radio astronomers almost never look at the raw data. The spectrogram gives them the RF signature and emitted power.
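A toy illustration of that point, assuming NumPy is available: a sine tone ten times weaker than the noise is buried in Gaussian noise, quantized to 8 levels, and then recovered by averaging FFT power spectra. The tone amplitude, FFT length, threshold spacing, and function names are all made up for the demonstration; this is a sketch of the idea, not ALMA's correlator.

```python
import numpy as np

def quantize_3bit(x, step):
    """Map samples to 8 levels (-3.5 ... +3.5 in units of `step`), clipping beyond."""
    codes = np.clip(np.floor(x / step), -4, 3)
    return codes + 0.5

def averaged_spectrum(samples, n_fft):
    """Average the FFT power spectra of consecutive n_fft-sample segments."""
    segments = samples.reshape(-1, n_fft)
    return np.mean(np.abs(np.fft.rfft(segments, axis=1)) ** 2, axis=0)

rng = np.random.default_rng(0)
n_fft, n_seg, tone_bin = 1024, 256, 200   # hypothetical demo parameters

t = np.arange(n_fft * n_seg)
noise = rng.standard_normal(t.size)                     # rms = 1
tone = 0.1 * np.sin(2 * np.pi * tone_bin * t / n_fft)   # far below the noise

raw = quantize_3bit(noise + tone, step=0.586)  # only 8 distinct values survive
spectrum = averaged_spectrum(raw, n_fft)

peak_bin = int(np.argmax(spectrum[1:])) + 1    # skip the DC bin
print("distinct raw values:", np.unique(raw).size)
print("tone injected at bin", tone_bin, "; spectral peak found at bin", peak_bin)
```

The raw stream is just a jumble of eight values, yet the averaged spectrum shows a clear line at the injected frequency.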

When I observed on the GBT, we were looking for gas clouds of formaldehyde near the center of the Milky Way. When cosmic formaldehyde gets dense enough, it starts to absorb the CMB. We could see the dips in the spectrogram corresponding to the RF quantum shifts in the molecules. Dense formaldehyde clouds are a sign of early star formation. Fun stuff.

Would reproducing a giant emitting Mona Lisa in space with a MATLAB radio telescope simulator with a low-bit ADC convince you?

ALMA has low dynamic range over a single set of observations. So you can observe and detect faint radio emissions (like phosphine on Venus) with sensitivities in the microJansky range, but when you observe and detect powerful radio emissions (like solar radio flares) ALMA’s sensitivities need to be set in the megaJansky range. https://en.wikipedia.org/wiki/Jansky

An astronomer who is privileged enough to use ALMA has to set the sensitivity of the telescope prior to observing. If they set the sensitivity too high, they will saturate the ADCs and not get any usable data. If they set the sensitivity too low, they won’t detect the signal they are looking for! ALMA provides a calculator to help astronomers: https://almascience.eso.org/proposing/sensitivity-calculator . Note that the astronomer can choose sensitivity units from microJanskys to kelvin (which is about a megaJansky).

The typical way to change the sensitivity of a radio telescope is through the use of an attenuator https://en.wikipedia.org/wiki/Attenuator_(electronics) . If the signal you are observing is saturating your ADCs, you increase the attenuation until the whole signal waveform fits within the ADC's input range. For solar observations, they built specialized attenuators for ALMA, described here: https://digitalcommons.njit.edu/cgi/viewcontent.cgi?article=1223&context=theses .
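As a rough sketch of that level-setting step, assuming zero-mean Gaussian noise into an 8-level ADC: the first function estimates what fraction of samples falls beyond the outermost thresholds, and the second gives the attenuation in dB needed to bring the input rms down to a target. The function names and the 0.586 threshold spacing are illustrative assumptions, not ALMA's procedure.

```python
import math

def clipped_fraction(rms, step, n_levels=8):
    """Fraction of zero-mean Gaussian samples beyond the outermost thresholds.

    Thresholds sit at 0, +/-step, ..., +/-(n_levels/2 - 1)*step; samples
    past the last one all collapse into the top or bottom level.
    """
    outer = (n_levels // 2 - 1) * step
    return math.erfc(outer / (rms * math.sqrt(2.0)))

def attenuation_db(rms, target_rms):
    """Voltage attenuation in dB that scales `rms` down to `target_rms`."""
    return 20.0 * math.log10(rms / target_rms)

step = 0.586  # assumed threshold spacing, in units of the target rms
for rms in (1.0, 10.0):
    print(f"rms={rms:5.1f}: {clipped_fraction(rms, step):.1%} of samples clipped, "
          f"needs {attenuation_db(rms, 1.0):.0f} dB of attenuation")
```

With the level set correctly only a few percent of samples hit the end levels; with an input ten times too strong most of them do, and the 3 bits are effectively wasted until the attenuation is turned up.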

Because ALMA has low dynamic range for a specified sensitivity, astronomers observing faint signals need to do so when there are no stronger emitters at the same frequency in the same part of the sky. If ALMA had high dynamic range, when Venus passed in front of the sun, perhaps an astronomer would be able to observe the sun’s radio emissions at the same time as observing phosphine radio emissions from Venus that were 12 orders of magnitude less powerful. For now, however, astronomers observing for phosphine on Venus would be well advised to do so at night when there are no other stars or planets nearby!

Finally, to answer the title question: ALMA's ADCs are only 3 bits because ALMA does not require a high dynamic range. Instead, astronomers must correctly configure the telescope sensitivity to observe and detect the signals they are interested in.